4.1. Creating the Compute Cluster

Before creating a compute cluster, make sure the network is set up according to the recommendations in Managing Networks and Traffic Types. The basic requirements are: (a) the traffic types VM private, VM public, Compute API, and VM backups must be assigned to networks; (b) the nodes to be added to the compute cluster must be connected to these networks, and all of them must be connected to the same network with the VM public traffic type.

Warning

The Compute API and VM private traffic types cannot be reassigned after the compute cluster deployment.

Important

The VM public traffic type cannot be removed from a network that has a public virtual network created on top of it.

In addition, high availability for the management node should be enabled (see Enabling High Availability).
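Because the Compute API and VM private traffic types cannot be reassigned after deployment, it can be worth checking the network requirements listed above beforehand. The following is a minimal Python sketch over a hypothetical, hand-written description of the setup (network names mapped to their traffic types, node names mapped to the networks they are connected to). It does not query Acronis Cyber Infrastructure; the function name and data structures are assumptions for illustration.

```python
# Hypothetical pre-deployment check. The dictionaries passed in are a
# hand-written description of the setup, not data read from the product.
REQUIRED_TRAFFIC_TYPES = {"VM private", "VM public", "Compute API", "VM backups"}


def check_compute_prerequisites(networks, nodes):
    """networks: network name -> set of traffic types assigned to it.
    nodes: node name -> set of network names the node is connected to.
    Returns a list of problems; an empty list means requirements (a) and (b)
    appear to be met."""
    problems = []

    # (a) Every required traffic type must be assigned to some network.
    assigned = set().union(*networks.values())
    for traffic_type in sorted(REQUIRED_TRAFFIC_TYPES - assigned):
        problems.append(f"traffic type {traffic_type!r} is not assigned to any network")

    # (b) Every node must be connected to every network carrying a required
    # traffic type, which also means all nodes share the VM public network.
    required_networks = {
        name for name, types in networks.items() if types & REQUIRED_TRAFFIC_TYPES
    }
    for node in sorted(nodes):
        for missing in sorted(required_networks - nodes[node]):
            problems.append(f"node {node!r} is not connected to network {missing!r}")

    return problems


if __name__ == "__main__":
    networks = {"Private": {"VM private", "Compute API", "VM backups"},
                "Public": {"VM public"}}
    nodes = {"node1": {"Private", "Public"}, "node2": {"Private"}}
    print(check_compute_prerequisites(networks, nodes))
    # prints ["node 'node2' is not connected to network 'Public'"]
```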

Also take note of the following:

- Creating the compute cluster prevents (and replaces) the use of the management node backup and restore feature.

- If nodes to be added to the compute cluster have different CPU models, consult Setting Virtual Machines CPU Model.

To create the compute cluster, open the COMPUTE screen, click Create compute cluster, and do the following in the Configure compute cluster window:

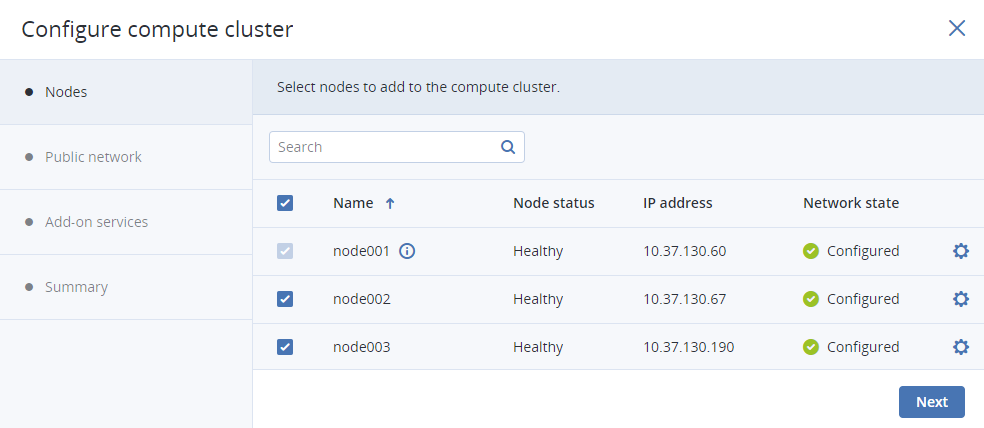

In the Nodes section, select nodes to add to the compute cluster, make sure the network state of each selected node is Configured, and click Next.

Nodes in the management node high availability cluster are automatically selected to join the compute cluster.

If node network interfaces are not configured, click the cogwheel icon, select networks as required, and click Apply.

Note

The compute cluster must have at least three nodes to allow self-service users to enable high availability for Kubernetes master nodes.
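The two conditions in this step (every selected node must be in the Configured network state, and at least three nodes are needed for Kubernetes master high availability) can be expressed as a small check. In this Python sketch the mapping of node names to network states is hypothetical input typed in by hand, and the "Not configured" state string is an assumed example; the wizard performs its own validation.

```python
def validate_node_selection(selected, min_nodes_for_k8s_master_ha=3):
    """selected: node name -> network state shown in the Nodes section.
    Returns (can_proceed, k8s_master_ha_possible, not_configured)."""
    # Nodes whose network state is anything other than "Configured".
    not_configured = sorted(
        name for name, state in selected.items() if state != "Configured"
    )
    can_proceed = bool(selected) and not not_configured
    # Self-service users can enable HA for Kubernetes master nodes only if
    # the compute cluster has at least three nodes.
    k8s_master_ha_possible = len(selected) >= min_nodes_for_k8s_master_ha
    return can_proceed, k8s_master_ha_possible, not_configured


if __name__ == "__main__":
    selection = {"node1": "Configured", "node2": "Configured",
                 "node3": "Not configured"}  # hypothetical state value
    print(validate_node_selection(selection))  # prints (False, True, ['node3'])
```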

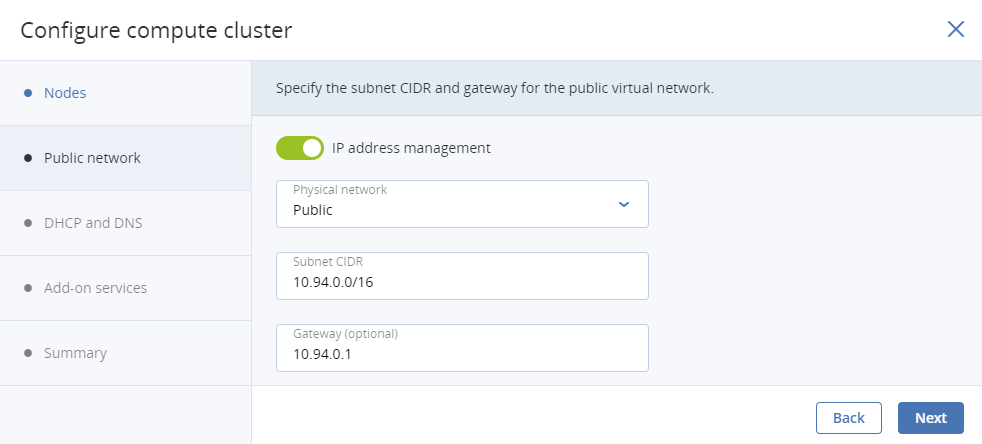

In the Public network section, enable IP address management if needed and provide the required details for the public network.

With IP address management enabled, Acronis Cyber Infrastructure will handle virtual machine IP addresses and provide the following features:

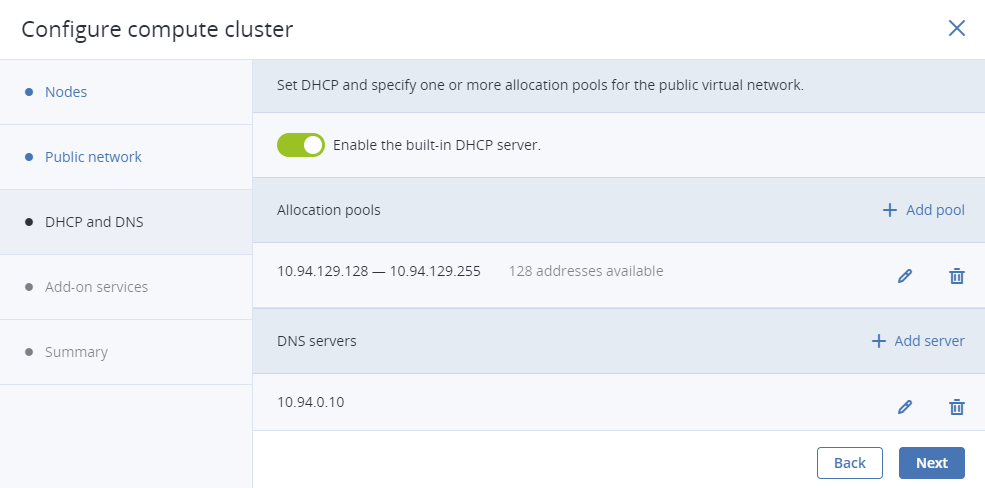

- Allocation pools. You can specify ranges of IP addresses that will be automatically assigned to VMs.

- Built-in DHCP server. Assigns IP addresses to virtual machines. With the DHCP server enabled, VM network interfaces will automatically be assigned IP addresses, either from allocation pools or, if there are no pools, from the network’s entire IP range. With the DHCP server disabled, VM network interfaces will still be allocated IP addresses, but you will have to configure them manually inside the VMs.

- Custom DNS servers. You can specify DNS servers that will be used by VMs. These servers will be delivered to virtual machines via the built-in DHCP server.
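To make the allocation rule in the DHCP bullet concrete (allocation pools if any are defined, otherwise the network’s entire range), here is a Python sketch using the standard `ipaddress` module. The first-free-address strategy, the exclusion of the gateway, and the function name are assumptions for illustration; the documentation does not state in which order the built-in DHCP server hands out addresses.

```python
import ipaddress


def next_free_address(cidr, allocated, pools=(), gateway=None):
    """Return the first address not yet allocated, or None if none is left.

    Addresses are taken from the allocation pools if any are defined;
    otherwise from every usable host address of the subnet."""
    network = ipaddress.ip_network(cidr)
    taken = {ipaddress.ip_address(a) for a in allocated}
    if gateway is not None:
        taken.add(ipaddress.ip_address(gateway))  # never hand out the gateway

    def candidates():
        if pools:
            for start, end in pools:
                first, last = ipaddress.ip_address(start), ipaddress.ip_address(end)
                # Walk each pool inclusively, keeping the address family.
                for value in range(int(first), int(last) + 1):
                    yield type(first)(value)
        else:
            yield from network.hosts()

    for address in candidates():
        if address in network and address not in taken:
            return str(address)
    return None


if __name__ == "__main__":
    # No pools: the whole /24 is used, and .1 (the gateway) is skipped.
    print(next_free_address("192.168.10.0/24", [], gateway="192.168.10.1"))
    # With a pool: only 192.168.10.100 and 192.168.10.101 are candidates.
    print(next_free_address("192.168.10.0/24", ["192.168.10.100"],
                            pools=[("192.168.10.100", "192.168.10.101")]))
```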

With IP address management disabled:

- VMs connected to a network will be able to obtain IP addresses from DHCP servers in that network.

- Spoofing protection will be disabled for all VM network ports. Each VM network interface will accept all traffic, even frames addressed to other network interfaces.

In either case, you will be able to manually assign static IP addresses from inside the VMs.
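The difference between the two modes can be pictured as a per-port filter. The Python sketch below is a deliberately simplified, hypothetical model, not how the product implements port filtering: with spoofing protection on, a port only sends frames from its own MAC and IP address and only receives frames addressed to its MAC (or broadcast); with protection off, as with IP address management disabled, it passes everything. Multicast and other cases are ignored.

```python
from dataclasses import dataclass

BROADCAST_MAC = "ff:ff:ff:ff:ff:ff"


@dataclass(frozen=True)
class Port:
    mac: str
    ip: str
    spoofing_protection: bool


def allows_outbound(port, src_mac, src_ip):
    """Protection on: only frames sent from the port's own MAC/IP pass."""
    if not port.spoofing_protection:
        return True
    return src_mac.lower() == port.mac.lower() and src_ip == port.ip


def allows_inbound(port, dst_mac):
    """Protection off: the interface accepts all traffic, even frames
    addressed to other interfaces."""
    if not port.spoofing_protection:
        return True
    return dst_mac.lower() in (port.mac.lower(), BROADCAST_MAC)


if __name__ == "__main__":
    protected = Port("fa:16:3e:00:00:01", "10.0.0.5", spoofing_protection=True)
    unprotected = Port("fa:16:3e:00:00:01", "10.0.0.5", spoofing_protection=False)
    print(allows_outbound(protected, "fa:16:3e:00:00:01", "10.0.0.99"))  # spoofed IP: False
    print(allows_inbound(unprotected, "fa:16:3e:00:00:02"))  # frame for another NIC: True
```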

Select a physical network to connect the public virtual network to. If IP address management is enabled, you can also optionally specify the network’s gateway; the subnet IP range in the CIDR format will be filled in automatically.
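The CIDR range filled in by the wizard corresponds to the subnet of the selected physical network. Assuming you know one address on that network together with its prefix length, Python’s `ipaddress` module shows how the CIDR value follows from it and how an optional gateway can be sanity-checked against it. The function name and the gateway check are illustrative, not part of the product.

```python
import ipaddress


def public_subnet_cidr(interface_address, gateway=None):
    """interface_address: an address on the physical network in
    'address/prefix' form, e.g. '10.94.0.17/16'.
    Returns the subnet in CIDR notation; raises ValueError if the optional
    gateway is outside that subnet."""
    subnet = ipaddress.ip_interface(interface_address).network
    if gateway is not None and ipaddress.ip_address(gateway) not in subnet:
        raise ValueError(f"gateway {gateway} is outside subnet {subnet}")
    return str(subnet)


if __name__ == "__main__":
    print(public_subnet_cidr("10.94.0.17/16", gateway="10.94.0.1"))  # 10.94.0.0/16
```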

By default, the public network will be shared between all future projects. You can disable this option on the network panel after the compute cluster is created.

The selected public network will appear in the list of virtual networks on the compute cluster’s NETWORKS tab.

Click Next.

If you enabled IP address management in the previous step, you will proceed to the DHCP and DNS section. There, enable or disable the built-in DHCP server and specify one or more allocation pools and DNS servers. Click Next.
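Before entering allocation pools and DNS servers, a quick consistency check of the planned values can catch typos. The Python sketch below uses the standard `ipaddress` module; its rules (pools must lie inside the subnet, must not overlap, and, as an assumption, should not contain the gateway; DNS servers must be valid IP addresses) are illustrative and may differ from what the product enforces.

```python
import ipaddress


def validate_dhcp_dns(cidr, pools, dns_servers, gateway=None):
    """Return a list of problems with the DHCP and DNS input (empty if none).

    cidr: the public subnet, e.g. '192.168.10.0/24'
    pools: list of (first, last) address pairs
    dns_servers: list of DNS server addresses as strings"""
    network = ipaddress.ip_network(cidr)
    gw = ipaddress.ip_address(gateway) if gateway else None
    errors, ranges = [], []

    for start, end in pools:
        first, last = ipaddress.ip_address(start), ipaddress.ip_address(end)
        name = f"{start}-{end}"
        if first > last:
            errors.append(f"pool {name}: first address is after last address")
        elif first not in network or last not in network:
            errors.append(f"pool {name} is outside {network}")
        else:
            if gw is not None and first <= gw <= last:
                errors.append(f"pool {name} contains the gateway {gw}")
            ranges.append((first, last, name))

    # After sorting by first address, checking neighbours is enough to tell
    # whether any overlap exists (not every overlapping pair is reported).
    ranges.sort()
    for (_, prev_last, prev_name), (next_first, _, next_name) in zip(ranges, ranges[1:]):
        if next_first <= prev_last:
            errors.append(f"pools {prev_name} and {next_name} overlap")

    for server in dns_servers:
        try:
            ipaddress.ip_address(server)
        except ValueError:
            errors.append(f"DNS server {server!r} is not a valid IP address")

    return errors


if __name__ == "__main__":
    print(validate_dhcp_dns("192.168.10.0/24",
                            [("192.168.10.100", "192.168.10.150")],
                            ["8.8.8.8"], gateway="192.168.10.1"))  # prints []
```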

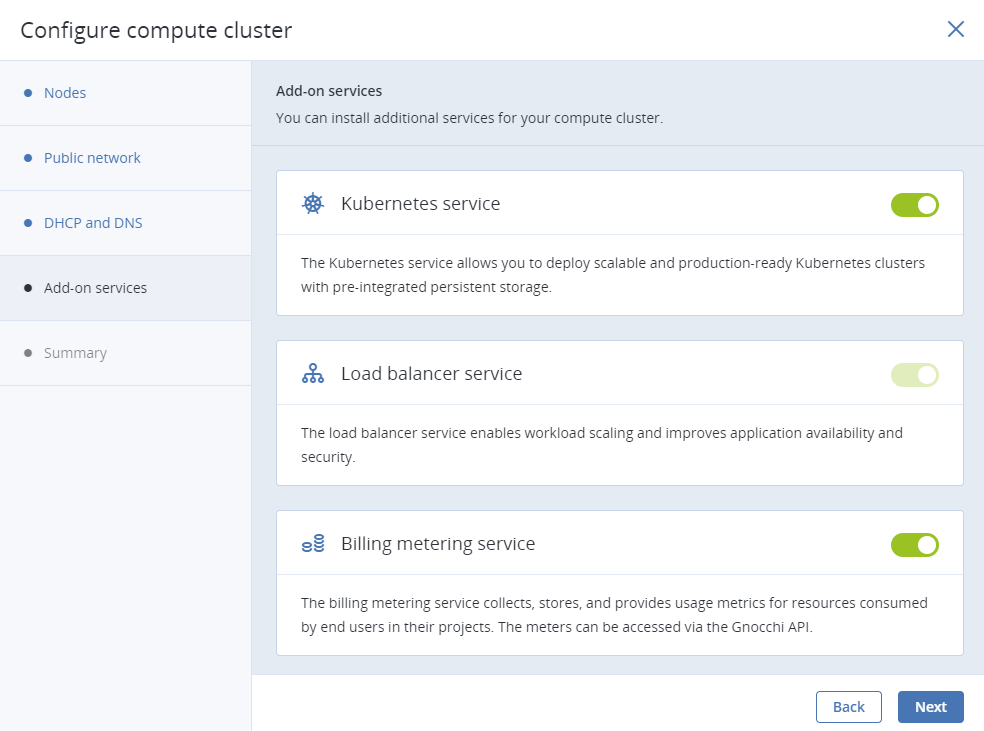

In the Add-on services section, enable additional services that will be installed during the compute cluster deployment. You can also install these services later (see Managing Add-On Services).

Important

To be able to deploy and work with Kubernetes clusters, make the following services accessible:

- the etcd discovery service at https://discovery.etcd.io, from all management nodes and from the public network with the VM public traffic type

- the public Docker Hub repository at https://registry-1.docker.io, from the public network with the VM public traffic type

- the compute API, from the public network with the VM public traffic type

- the Kubernetes API at the public or floating IP address of the Kubernetes load balancer or master VM on port 6443, from all management nodes
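One way to verify these requirements is a plain TCP connection test run from each location listed above. The Python sketch below uses only the standard `socket` module. The compute API host and port and the Kubernetes API address are hypothetical placeholders, not product defaults; replace them with your own values. Port 443 is assumed for the two HTTPS URLs.

```python
import socket


def is_reachable(host, port, timeout=5.0):
    """Return True if a TCP connection to host:port can be opened in time."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # DNS failure, refusal, and timeout all land here
        return False


# Endpoints from the list above, grouped by where the check must be run.
# The compute API endpoint and the Kubernetes API address are hypothetical.
CHECKS = {
    "from management nodes": [
        ("discovery.etcd.io", 443),
        ("203.0.113.10", 6443),  # hypothetical Kubernetes load balancer/master IP
    ],
    "from the public network with the VM public traffic type": [
        ("discovery.etcd.io", 443),
        ("registry-1.docker.io", 443),
        ("compute-api.example.com", 8774),  # hypothetical compute API endpoint
    ],
}


def run_checks(endpoints, timeout=5.0):
    """Return {"host:port": reachable} for a list of (host, port) pairs."""
    return {f"{host}:{port}": is_reachable(host, port, timeout)
            for host, port in endpoints}
```

For example, on a management node you might call `run_checks(CHECKS["from management nodes"])` and look for `False` values. A successful TCP connection only shows that the port is open, not that the service behind it is healthy.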

If the Compute API traffic type is assigned to a private network that is not directly accessible from the network with the VM public traffic type, but is exposed to public networks via NAT and reachable by a public DNS name, set the DNS name for the compute API as described in Setting a DNS Name for the Compute API.
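Before setting the DNS name, it may help to confirm, from a machine on the network with the VM public traffic type, that the name resolves and points at a globally routable (NAT) address rather than a private one. A minimal Python sketch using the standard `socket` and `ipaddress` modules follows; the helper names are hypothetical, and the check assumes the NAT address is a public one.

```python
import ipaddress
import socket


def resolve(dns_name):
    """Return the sorted IP addresses that dns_name resolves to, or [] if it
    does not resolve."""
    try:
        infos = socket.getaddrinfo(dns_name, None, proto=socket.IPPROTO_TCP)
    except socket.gaierror:
        return []
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr[0]
    # is the address string.
    return sorted({info[4][0] for info in infos})


def globally_routable(addresses):
    """Keep only addresses that are not private, loopback, or otherwise reserved."""
    # Strip an IPv6 scope suffix such as '%eth0' before parsing.
    return [a for a in addresses if ipaddress.ip_address(a.split("%")[0]).is_global]
```

For instance, `globally_routable(resolve("compute.example.com"))` (with your own name in place of the hypothetical one) returning an empty list suggests the name is missing or resolves only to private addresses.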

Note

Installing Kubernetes automatically installs the load balancer service as well.
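The note describes a dependency between add-on services: selecting Kubernetes also pulls in the load balancer. The effect is a transitive closure over a dependency map, sketched in Python below. The service identifiers and the map are hypothetical and encode only the single relationship stated in the note.

```python
# Hypothetical dependency map; only "Kubernetes requires the load balancer"
# comes from the documentation.
SERVICE_DEPENDENCIES = {
    "kubernetes": {"load_balancer"},
    "load_balancer": set(),
}


def services_to_install(selected, dependencies=SERVICE_DEPENDENCIES):
    """Return the selected services plus everything they depend on."""
    to_visit = list(selected)
    result = set()
    while to_visit:
        service = to_visit.pop()
        if service in result:
            continue  # already handled; also guards against cycles
        result.add(service)
        to_visit.extend(dependencies.get(service, ()))
    return result


if __name__ == "__main__":
    print(sorted(services_to_install({"kubernetes"})))  # ['kubernetes', 'load_balancer']
```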

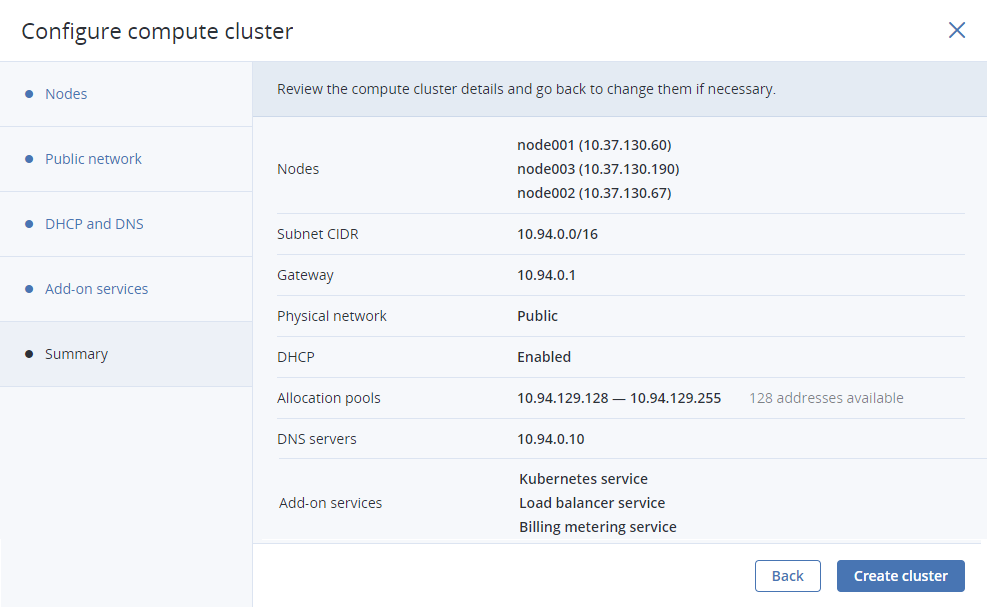

In the Summary section, review the configuration and click Create cluster.

You can monitor the compute cluster deployment on the COMPUTE screen.