2.3. Planning Network

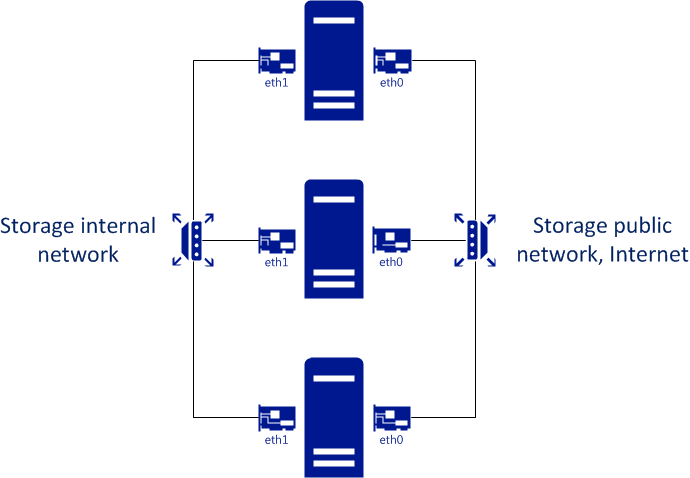

Acronis Storage uses two networks: (a) an internal network that interconnects nodes and combines them into a cluster, and (b) a public network for managing the cluster via the admin panel and SSH, exporting stored data to users, and providing external access from virtual machines.

The figure below shows a top-level overview of the internal and public networks of Acronis Storage.

2.3.1. General Network Requirements

Make sure that time is synchronized on all nodes in the cluster via NTP. Doing so will make it easier for the support department to understand cluster logs.

2.3.2. Network Limitations

- Nodes are added to clusters by their IP addresses, not FQDNs. Changing the IP address of a node in the cluster will remove that node from the cluster. If you plan to use DHCP in a cluster, make sure that IP addresses are bound to the MAC addresses of nodes’ network interfaces.

- Fibre Channel and InfiniBand networks are not supported.

- Each node must have Internet access so updates can be installed.

- MTU is set to 1500 by default.

- Network time synchronization (NTP) is required for correct statistics.

- The management traffic type is assigned automatically during installation and cannot be changed in the admin panel later.

- Even though the management node can be accessed from a web browser by the hostname, you still need to specify its IP address, not the hostname, during installation.
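Because nodes are identified by IP address rather than by FQDN or hostname, it can be useful to validate a value before entering it during installation. The following is a minimal sketch that uses Python's standard `ipaddress` module. The function name `is_ip_address` and the sample values are illustrative assumptions and are not part of Acronis Storage.

```python
import ipaddress


def is_ip_address(value: str) -> bool:
    """Return True if value parses as an IPv4 or IPv6 address, False otherwise."""
    try:
        ipaddress.ip_address(value)
        return True
    except ValueError:
        return False


# Hypothetical inputs: one literal address and one hostname.
candidates = ["10.0.0.11", "mgmt.example.com"]
results = {candidate: is_ip_address(candidate) for candidate in candidates}
print(results)
```

A check like this only confirms that the string is a literal address. It does not verify that the address is static or bound to a MAC address through a DHCP reservation.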

2.3.3. Per-Node Network Requirements

Network requirements for each cluster node depend on the services that will run on that node:

Each node in the cluster must have access to the internal network and have port 8888 open to listen for incoming connections from the internal network.

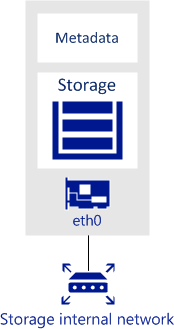

Each storage and metadata node must have at least one network interface for the internal network traffic. The IP addresses assigned to this interface must be either static or, if DHCP is used, mapped to the adapter’s MAC address. The figure below shows a sample network configuration for a storage and metadata node.

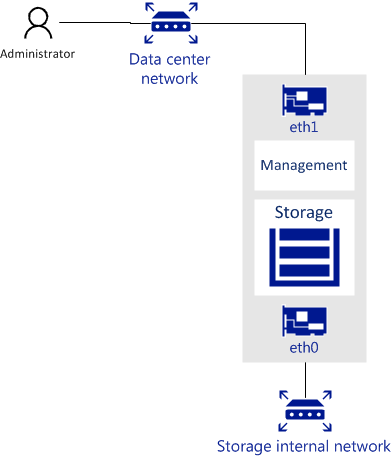

The management node must have a network interface for internal network traffic and a network interface for the public network traffic (e.g., to the datacenter or a public network) so the admin panel can be accessed via a web browser.

By default, the management node must have port 8888 open to allow access to the admin panel from the public network and to the cluster node from the internal network.

The figure below shows a sample network configuration for a storage and management node.

A node that runs one or more storage access point services must have a network interface for the internal network traffic and a network interface for the public network traffic.

The figure below shows a sample network configuration for a node with an iSCSI access point. iSCSI access points use TCP port 3260 for incoming connections from the public network.

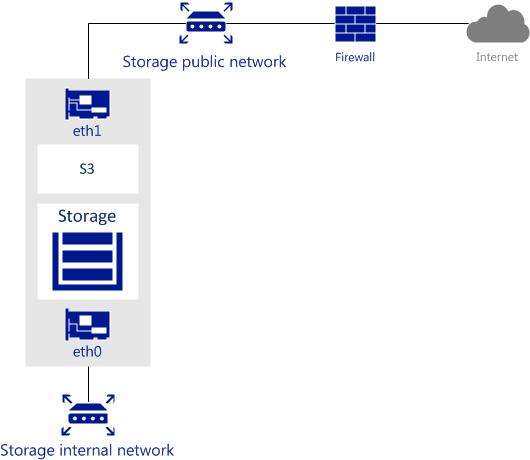

The next figure shows a sample network configuration for a node with an S3 storage access point. S3 access points use ports 443 (HTTPS) and 80 (HTTP) to listen for incoming connections from the public network.

In the scenario pictured above, the internal network is used for both the storage and S3 cluster traffic.

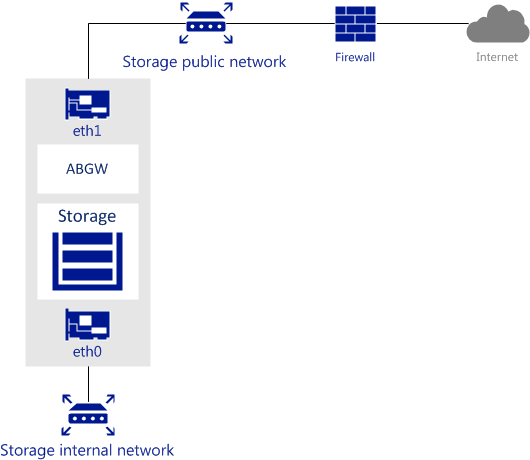

The next figure shows a sample network configuration for a node with an Acronis Backup Gateway storage access point. Acronis Backup Gateway access points use port 44445 for incoming connections from both the internal and public networks, and ports 443 and 8443 for outgoing connections to the public network.
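The listening ports named in this section can be collected in one place and probed from a client machine. The following is a minimal sketch that uses only Python's standard `socket` module. The dictionary keys, the function names, and the address in the usage note are illustrative assumptions, not Acronis identifiers. A successful TCP connection shows only that something is listening on the port, not that the service itself is healthy.

```python
import socket

# Listening ports named in this section, grouped by service.
# The keys are illustrative labels chosen for this sketch.
LISTENING_PORTS = {
    "admin_panel_and_internal": [8888],
    "iscsi": [3260],
    "s3": [443, 80],
    "backup_gateway": [44445],
}


def port_is_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, unreachable hosts, and timeouts.
        return False


def check_service(host: str, service: str) -> dict:
    """Map each listening port of the given service to its reachability on host."""
    return {port: port_is_open(host, port) for port in LISTENING_PORTS[service]}
```

For example, `check_service("192.0.2.10", "iscsi")` would probe TCP port 3260 on a hypothetical node at `192.0.2.10`. The outgoing Acronis Backup Gateway ports (443 and 8443) are left out because they are opened from the node, not toward it.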

2.3.4. Network Recommendations for Clients

The following table lists the maximum network performance a client can get with the specified network interface. Clients are advised to use 10 Gbps network hardware between any two cluster nodes and to minimize network latency, especially if SSDs are used.

| Storage network interface | Node max. I/O | VM max. I/O (replication) | VM max. I/O (erasure coding) |
|---|---|---|---|
| 1 Gbps | 100 MB/s | 100 MB/s | 70 MB/s |
| 2 x 1 Gbps | ~175 MB/s | 100 MB/s | ~130 MB/s |
| 3 x 1 Gbps | ~250 MB/s | 100 MB/s | ~180 MB/s |
| 10 Gbps | 1 GB/s | 1 GB/s | 700 MB/s |
| 2 x 10 Gbps | 1.75 GB/s | 1 GB/s | 1.3 GB/s |
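To put the table in context, the listed maxima can be compared with the raw line rate of each interface. The sketch below copies the values from the table and applies the conversion 1 Gbps = 125 MB/s. Treating 1 GB/s as 1000 MB/s and dropping the "~" from approximate entries are assumptions made only for this comparison.

```python
# Values copied from the table above, in MB/s. Entries marked "~" in the table
# are approximate, and 1 GB/s is taken as 1000 MB/s (an assumption).
# Tuple layout: (links, gbps_per_link, node_max, vm_replication, vm_erasure_coding)
TABLE_MB_S = {
    "1 Gbps": (1, 1, 100, 100, 70),
    "2 x 1 Gbps": (2, 1, 175, 100, 130),
    "3 x 1 Gbps": (3, 1, 250, 100, 180),
    "10 Gbps": (1, 10, 1000, 1000, 700),
    "2 x 10 Gbps": (2, 10, 1750, 1000, 1300),
}


def line_rate_mb_s(links: int, gbps_per_link: int) -> float:
    """Raw line rate in MB/s: 1 Gbps = 1000 Mbit/s = 125 MB/s per link."""
    return links * gbps_per_link * 1000 / 8


for name, (links, gbps, node_max, vm_repl, vm_ec) in TABLE_MB_S.items():
    raw = line_rate_mb_s(links, gbps)
    print(
        f"{name}: line rate {raw:.0f} MB/s, node max {node_max} MB/s "
        f"({node_max / raw:.0%} of line rate)"
    )
```

In the table, the replication figure for a single VM stays at the single-link value when links are added (100 MB/s for every 1 Gbps row, 1 GB/s for both 10 Gbps rows), while the node and erasure-coding figures grow with the number of links.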

2.3.5. Sample Network Configuration

The figure below shows an overview of a sample Acronis Storage network.

In this network configuration:

The Acronis Storage internal network is a network that interconnects all servers in the cluster. It can be used for the management, storage, and S3 internal services. Each of these services can be moved to a separate dedicated internal network to ensure high performance under heavy workloads.

This network cannot be accessed from the public network. All servers in the cluster are connected to this network.

Important

Acronis Storage does not offer protection from traffic sniffing. Anyone with access to the internal network can capture and analyze the data being transmitted.

The Acronis Storage public network is a network over which the storage space is exported. Depending on where the storage space is exported to, it can be an internal datacenter network or an external public network:

- An internal datacenter network can be used to manage Acronis Storage and export the storage space over iSCSI to other servers in the datacenter.

- An external public network can be used to export the storage space to outside services through S3 and Acronis Backup Gateway storage access points.