2.5. Planning the network

The network configuration for Acronis Cyber Infrastructure depends on the services you plan to deploy.

If you want to use only the Backup Gateway and storage services, configure two networks, for internal and external traffic:

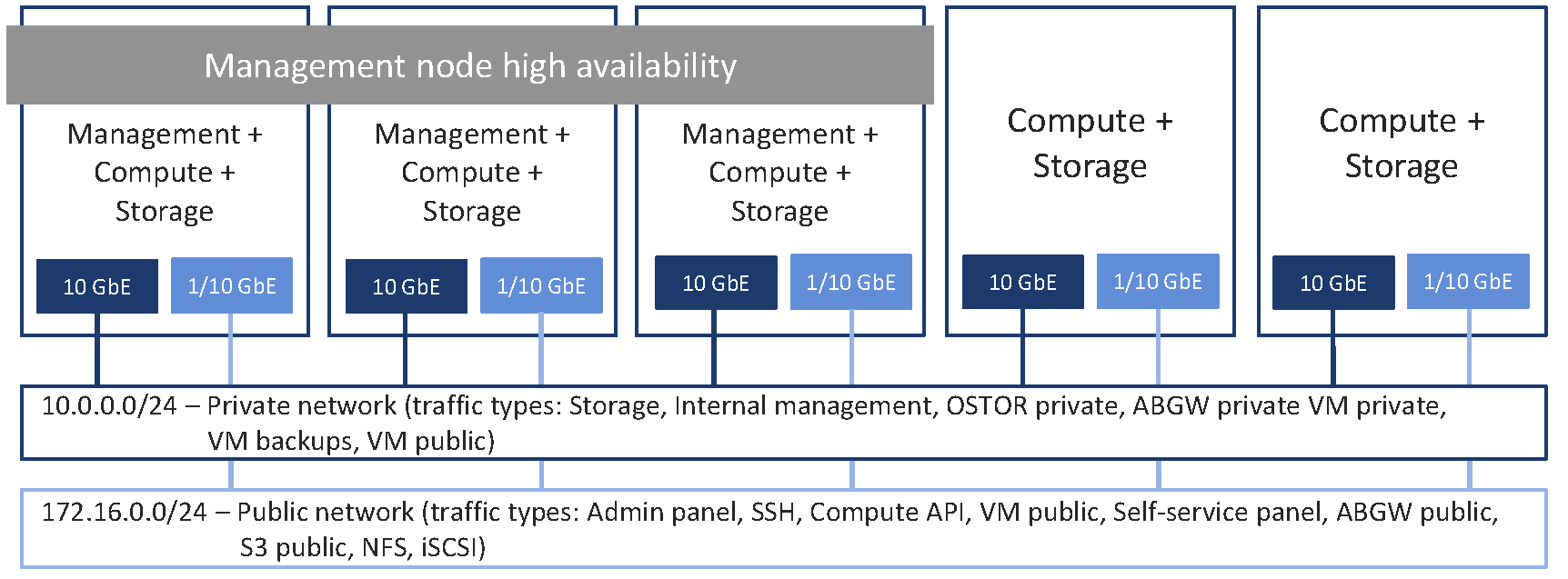

If you plan to deploy the compute service on top of the storage cluster, you can create a minimum network configuration for evaluation purposes, or expand it to an advanced network configuration, which is recommended for production.

The minimum configuration includes two networks, for internal and external traffic:

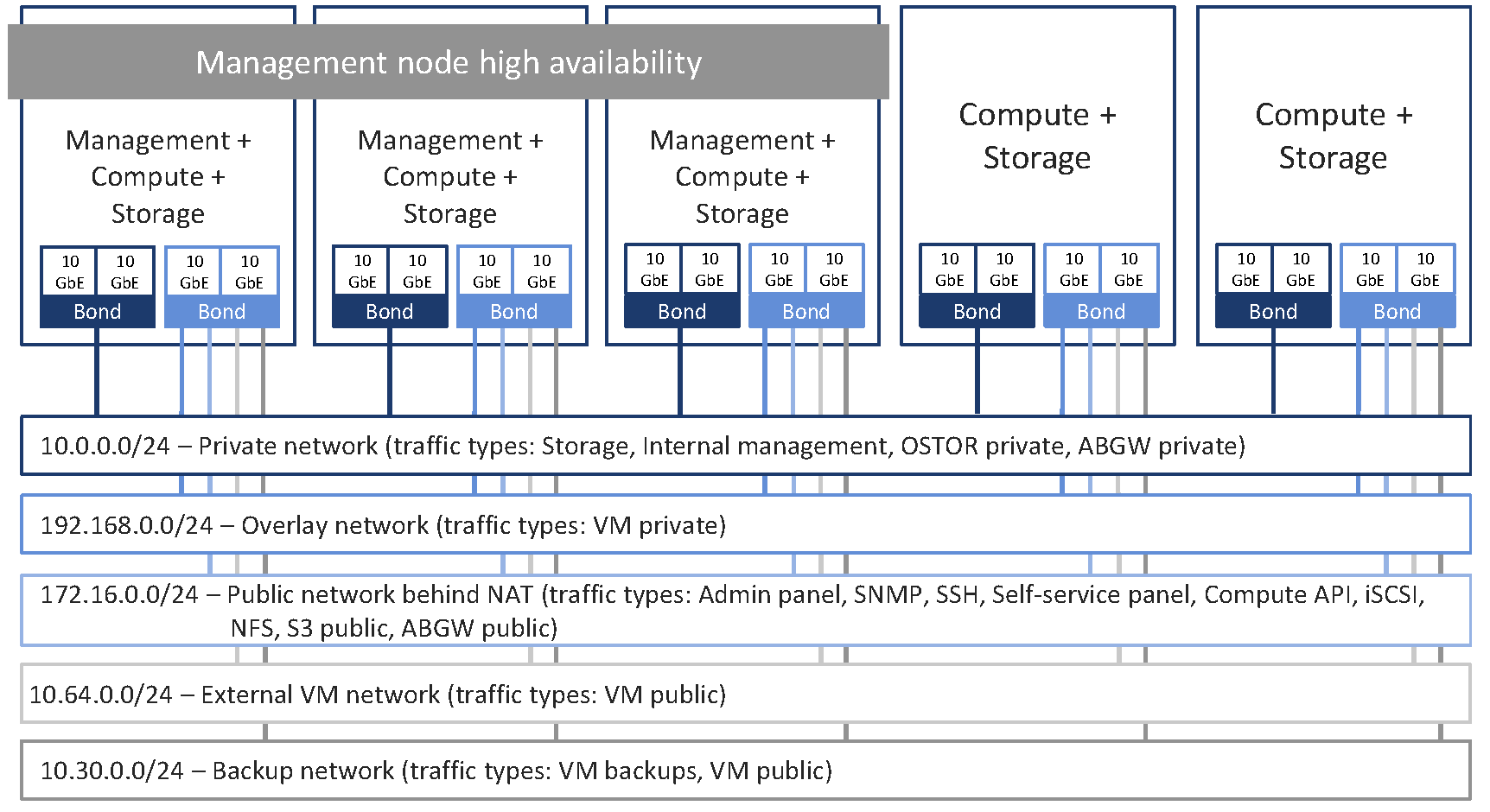

The recommended configuration expands to five networks connected to the following logical network interfaces:

One bonded connection for internal management and storage traffic

One bonded connection with four VLANs over it:

For overlay network traffic between VMs

For management via the admin and self-service panels, compute API, SSH, and SNMP, as well as for public export of iSCSI, NFS, S3, and Backup Gateway data

For external VM traffic

For pulling VM backups by third-party backup management systems

2.5.1. General network requirements

Internal storage traffic must be separated from other traffic types.

The network for internal traffic can be non-routable and must provide at least 10 Gbit/s of bandwidth.

2.5.2. Network limitations

Nodes are added to clusters by their IP addresses, not FQDNs. Changing the IP address of a node in the cluster will remove that node from the cluster. If you plan to use DHCP in a cluster, make sure that IP addresses are bound to the MAC addresses of the nodes’ network interfaces.

Each node must have Internet access so that updates can be installed.

The MTU value is set to 1500 by default. Refer to Step 2: Configuring the network for information on setting an optimal MTU value.

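Network time synchronization is required for correct statistics. It is enabled by default via the `chronyd` service. If you want to use `ntpdate` or `ntpd`, stop and disable `chronyd` first.

The Internal management traffic type is assigned automatically during installation and cannot be changed in the admin panel later.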

Even though the management node can be accessed from a web browser by the host name, you still need to specify its IP address, not the host name, during installation.
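The last limitation can be checked before installation starts. The following is a minimal sketch, assuming Python's standard `ipaddress` module is available on the workstation; `is_ip_address` is a hypothetical helper name and the sample values are illustrative only.

```python
import ipaddress


def is_ip_address(value: str) -> bool:
    """Return True if value parses as an IP address, False otherwise."""
    try:
        ipaddress.ip_address(value)
        return True
    except ValueError:
        return False


# Hypothetical sample values, not taken from a real deployment.
for candidate in ("10.37.130.2", "mgmt-node.example.com"):
    verdict = "OK" if is_ip_address(candidate) else "not an IP address"
    print(f"{candidate}: {verdict}")
```

A host name fails the check even if it resolves correctly, which matches the requirement to enter the IP address itself.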

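2.5.3. Per-node network requirements and recommendations

All network interfaces on a node must be connected to different subnets. A network interface can be a VLAN-tagged logical interface, an untagged bond, or an Ethernet link.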
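The subnet requirement can be verified against a planned address layout. The sketch below assumes IPv4 addressing in CIDR notation; the interface names, addresses, and the `shared_subnets` helper are hypothetical and serve only as an illustration.

```python
import ipaddress
from itertools import combinations


def shared_subnets(interfaces: dict[str, str]) -> list[tuple[str, str]]:
    """Return pairs of interface names whose networks overlap."""
    networks = {
        name: ipaddress.ip_interface(cidr).network
        for name, cidr in interfaces.items()
    }
    return [
        (a, b)
        for a, b in combinations(networks, 2)
        if networks[a].overlaps(networks[b])
    ]


# Hypothetical node plan: eth0 and eth1 both sit in 10.0.0.0/24.
node = {
    "eth0": "10.0.0.11/24",
    "eth1": "10.0.0.12/24",
    "bond0.100": "192.168.10.11/24",
}
print(shared_subnets(node))  # [('eth0', 'eth1')]
```

An empty result means every interface in the plan is on its own subnet.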

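Even though cluster nodes have the necessary `iptables` rules configured, we recommend using an external firewall for untrusted public networks, such as the Internet.

The ports that will be opened on cluster nodes depend on the services that will run on the node and the traffic types associated with them. Before enabling a specific service on a cluster node, you need to assign the respective traffic type to a network this node is connected to. Assigning a traffic type to a network configures a firewall on the nodes connected to this network, opens specific ports on the node network interfaces, and sets the necessary `iptables` rules.

The table below lists all the required ports and the services associated with them: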

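Table 2.5.3.1 Open ports on cluster nodes

| Service | Traffic type | Port | Description |
|---|---|---|---|
| Web control panel | Admin panel* | TCP 8888 | External access to the admin panel. |
| Web control panel | Self-service panel | TCP 8800 | External access to the self-service panel. |
| Management | Internal management | Any available port | Internal cluster management and transfers of node monitoring data to the admin panel. |
| Metadata service | Storage | Any available port | Internal communication between MDS services, as well as with chunk services and clients. |
| Chunk service | Storage | Any available port | Internal communication with MDS services and clients. |
| Client | Storage | Any available port | Internal communication with MDS and chunk services. |
| Backup Gateway | ABGW public | TCP 44445 | External data exchange with Acronis Backup agents and Acronis Cyber Backup Cloud. |
| Backup Gateway | ABGW private | Any available port | Internal management of and data exchange between multiple Backup Gateway services. |
| iSCSI | iSCSI | TCP 3260 | External data exchange with the iSCSI access point. |
| S3 | S3 public | TCP 80, 443 | External data exchange with the S3 access point. |
| S3 | OSTOR private | Any available port | Internal data exchange between multiple S3 services. |
| NFS | NFS | TCP/UDP 111, 892, 2049 | External data exchange with the NFS access point. |
| NFS | OSTOR private | Any available port | Internal data exchange between multiple NFS services. |
| Compute | Compute API* | | External access to standard OpenStack API endpoints: |
| Compute | Compute API* | TCP 5000 | Identity API v3 |
| Compute | Compute API* | TCP 6080 | noVNC Websocket proxy |
| Compute | Compute API* | TCP 8004 | Orchestration service API v1 |
| Compute | Compute API* | TCP 8041 | Gnocchi API (billing metering service) |
| Compute | Compute API* | TCP 8774 | Compute API |
| Compute | Compute API* | TCP 8776 | Block storage API v3 |
| Compute | Compute API* | TCP 8780 | Placement API |
| Compute | Compute API* | TCP 9292 | Image service API v2 |
| Compute | Compute API* | TCP 9313 | Key manager API v1 |
| Compute | Compute API* | TCP 9513 | Container infrastructure management API (Kubernetes service) |
| Compute | Compute API* | TCP 9696 | Networking API v2 |
| Compute | Compute API* | TCP 9888 | Octavia API v2 (load balancer service) |
| Compute | VM private | UDP 4789 | Network traffic between VMs in compute virtual networks. |
| Compute | VM private | TCP 5900-5999 | VNC console traffic. |
| Compute | VM backups | TCP 49300-65535 | External access to NBD endpoints. |
| SSH | SSH | TCP 22 | Remote access to nodes via SSH. |
| SNMP | SNMP* | UDP 161 | External access to storage cluster monitoring statistics via the SNMP protocol. |

* Ports for these traffic types must only be open on management nodes.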
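When preparing rules for an external firewall, it can help to keep the port assignments from the table above as data. The following sketch covers only a subset of the traffic types (those with fixed ports, named in English); the `PORTS` mapping and the `ports_for` helper are hypothetical names, not part of the product.

```python
# Fixed ports per traffic type, copied from the table above.
# Traffic types that use "any available port" and the compute
# traffic types are left out to keep the sketch short.
PORTS = {
    "Admin panel": [("tcp", 8888)],
    "Self-service panel": [("tcp", 8800)],
    "ABGW public": [("tcp", 44445)],
    "iSCSI": [("tcp", 3260)],
    "S3 public": [("tcp", 80), ("tcp", 443)],
    "NFS": [
        (proto, port)
        for port in (111, 892, 2049)
        for proto in ("tcp", "udp")
    ],
    "SSH": [("tcp", 22)],
    "SNMP": [("udp", 161)],
}


def ports_for(traffic_types):
    """Return sorted, de-duplicated (protocol, port) pairs for the given traffic types."""
    needed = set()
    for traffic_type in traffic_types:
        needed.update(PORTS[traffic_type])
    return sorted(needed, key=lambda item: (item[1], item[0]))


# Hypothetical network that carries three traffic types.
print(ports_for(["Admin panel", "SSH", "S3 public"]))
# [('tcp', 22), ('tcp', 80), ('tcp', 443), ('tcp', 8888)]
```

The result lists what an upstream firewall would have to allow for a network with those traffic types assigned; an unknown traffic type raises `KeyError` rather than being silently ignored.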

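2.5.4. Kubernetes network requirements

To be able to deploy Kubernetes clusters in the compute cluster and work with them, make sure that your network configuration allows the compute and Kubernetes services to send the following network requests: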

The request to bootstrap the etcd cluster in the public discovery service - from all management nodes to https://discovery.etcd.io via the public network.

The request to obtain the “kubeconfig” file - from all management nodes via the public network:

If high availability (HA) for the master VM is enabled, the request is sent to the public or floating IP address of the load balancer VM associated with the Kubernetes API on port 6443.

If HA for the master VM is disabled, the request is sent to the public or floating IP address of the Kubernetes master VM on port 6443.

Requests from Kubernetes master VMs to the compute APIs (the Compute API traffic type) via the network with the VM public traffic type (via a publicly available VM network interface or a virtual router with enabled SNAT). By default, the compute API is exposed via the IP address of the management node (or its virtual IP address if high availability is enabled). You can also access the compute API via a DNS name (refer to Setting a DNS name for the compute API).

The request to update the etcd cluster member state in the public discovery service - from Kubernetes master VMs to https://discovery.etcd.io via the network with the VM public traffic type (via a publicly available VM network interface or a virtual router with enabled SNAT).

The request to download container images from the public Docker Hub repository - from Kubernetes master VMs to https://registry-1.docker.io via the network with the VM public traffic type (via a publicly available VM network interface or a virtual router with enabled SNAT).

It is also required that the network where you create a Kubernetes cluster does not overlap with these default networks:

10.100.0.0/24—Used for pod-level networking

10.254.0.0/16—Used for allocating Kubernetes cluster IP addresses
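The overlap requirement can be tested for a planned network before the Kubernetes cluster is created. This is a minimal sketch that uses Python's standard `ipaddress` module; the `conflicts` helper and the sample networks are hypothetical.

```python
import ipaddress

# Default Kubernetes networks listed above.
RESERVED = {
    "pod-level networking": ipaddress.ip_network("10.100.0.0/24"),
    "Kubernetes cluster IP addresses": ipaddress.ip_network("10.254.0.0/16"),
}


def conflicts(cidr: str) -> list[str]:
    """Return the purposes of the reserved networks that cidr overlaps."""
    candidate = ipaddress.ip_network(cidr)
    return [
        purpose
        for purpose, network in RESERVED.items()
        if candidate.overlaps(network)
    ]


# Hypothetical candidate networks for a Kubernetes cluster.
print(conflicts("10.100.0.128/25"))   # ['pod-level networking']
print(conflicts("192.168.128.0/24"))  # []
```

An empty list means the candidate network is safe with respect to both defaults. Note that a wide network such as 10.0.0.0/8 overlaps both of them.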

2.5.5. Network recommendations for clients

The following table lists the maximum network performance a client can get with the specified network interface. The recommendation for clients is to use 10 Gbps network hardware between any two cluster nodes and minimize network latencies, especially if SSD disks are used.

| Storage network interface | Node max. I/O | VM max. I/O (replication) | VM max. I/O (erasure coding) |
|---|---|---|---|
| 1 Gbps | 100 MB/s | 100 MB/s | 70 MB/s |
| 2 x 1 Gbps | ~175 MB/s | 100 MB/s | ~130 MB/s |
| 3 x 1 Gbps | ~250 MB/s | 100 MB/s | ~180 MB/s |
| 10 Gbps | 1 GB/s | 1 GB/s | 700 MB/s |
| 2 x 10 Gbps | 1.75 GB/s | 1 GB/s | 1.3 GB/s |
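To get a feel for what these limits mean in practice, the table values can be turned into a lower bound on transfer time. The sketch below is illustrative only: it assumes 1 GB = 1000 MB, treats the approximate (~) values as exact, and uses hypothetical names (`MAX_IO_MBPS`, `min_transfer_seconds`).

```python
# Maximum I/O in MB/s from the table above (1 GB/s taken as 1000 MB/s,
# approximate values treated as exact).
# Tuple order: node, VM with replication, VM with erasure coding.
MAX_IO_MBPS = {
    "1 Gbps": (100, 100, 70),
    "2 x 1 Gbps": (175, 100, 130),
    "3 x 1 Gbps": (250, 100, 180),
    "10 Gbps": (1000, 1000, 700),
    "2 x 10 Gbps": (1750, 1000, 1300),
}
COLUMNS = {"node": 0, "replication": 1, "erasure coding": 2}


def min_transfer_seconds(size_gb, interface, mode):
    """Lower bound, in seconds, on moving size_gb gigabytes at the table's peak rate."""
    rate_mbps = MAX_IO_MBPS[interface][COLUMNS[mode]]
    return size_gb * 1000 / rate_mbps


# Hypothetical 500 GB transfer from a VM that uses replication.
print(min_transfer_seconds(500, "1 Gbps", "replication"))   # 5000.0
print(min_transfer_seconds(500, "10 Gbps", "replication"))  # 500.0
```

Real transfers take longer than this bound, since the table lists maximum rates and latency, disk speed, and concurrent load are not modeled.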