2.5. Replacing Node Disks

When you replace a failed disk in a storage cluster node, you need to assign the new disk the same roles that the failed disk had. Most roles need to be assigned by hand. For Storage disks (CS), however, this can be done automatically to speed up replacement.

2.5.1. Replacing Storage Disks

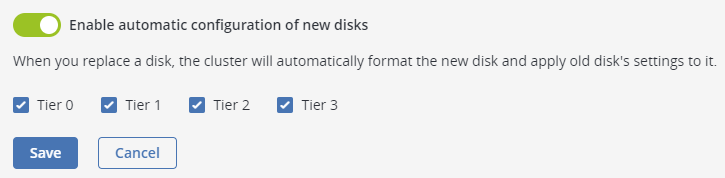

To have the Storage role assigned to replacement CS disks automatically, do the following:

- Navigate to SETTINGS > Advanced settings > DISK.

- Turn on the Enable automatic configuration of new disks option.

- Optionally, select tiers to scan for failed disks. If a disk on an unselected tier breaks down, you will have to assign roles to its replacement manually.

- Click Save.

From now on, when you replace a failed Storage disk, the new disk will be detected, formatted, assigned the same role, and placed on the same tier (if applicable). You will see the result on the node’s Disks screen.

Take note of the following:

- If the new disk is larger than the old one, it will be assigned the Storage role.

- If the new disk is smaller than the old one, it will not be assigned the Storage role. Instead, you will be alerted about the size difference and will have to assign the role manually (or change the disk to a larger one).

- If the new disk is of a different type than the old one (e.g., if you replaced an SSD with an HDD or vice versa), it will not be assigned the Storage role. Instead, you will be alerted about the type difference and will have to assign the role manually (or change the disk to one of the needed type).

- If you enable this feature after a disk fails, its replacement will not be assigned the Storage role.

- If you accidentally remove and then re-attach a healthy Storage disk, its data will be reused.

- If you add a disk that does not replace any failed ones, it will not be assigned the Storage role.

- If you add a disk and one of the CSes is inactive or offline, the disk will be assigned the Storage role and a new CS will be created.

- If you attach multiple replacement disks at once, the Storage role will be assigned to them in no particular order, as long as their size and type fit. They will also be assigned to correct tiers, if applicable.
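The size, type, and timing rules above can be summarized as a small decision function. This is only an illustrative sketch, not the product's implementation: the `Disk` class, the `auto_assign_decision` function, and its parameters are hypothetical names. The list does not say what happens when the new disk is exactly the same size as the old one; the sketch assumes it is accepted. The inactive or offline CS case and the re-attached healthy disk case are not modeled.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Disk:
    size_gb: int
    kind: str  # "ssd" or "hdd"


def auto_assign_decision(
    new: Disk,
    failed: Optional[Disk],
    enabled_before_failure: bool,
) -> str:
    """Return "assign" or "manual: <reason>" for a newly attached disk.

    Hypothetical helper that mirrors the documented rules only.
    """
    if failed is None:
        # The new disk does not replace any failed disk.
        return "manual: no failed disk to replace"
    if not enabled_before_failure:
        # The feature was switched on after the disk had already failed.
        return "manual: feature was enabled after the failure"
    if new.kind != failed.kind:
        return "manual: disk type differs from the old disk"
    if new.size_gb < failed.size_gb:
        return "manual: disk is smaller than the old disk"
    # Larger disk (or, by assumption, a disk of equal size).
    return "assign"
```

For example, `auto_assign_decision(Disk(4000, "hdd"), Disk(2000, "hdd"), True)` returns `"assign"`, while swapping in an SSD for the same failed HDD returns a `"manual: ..."` result.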

2.5.2. Replacing Disks Manually

To manually replace a disk and assign roles to the new one, do the following:

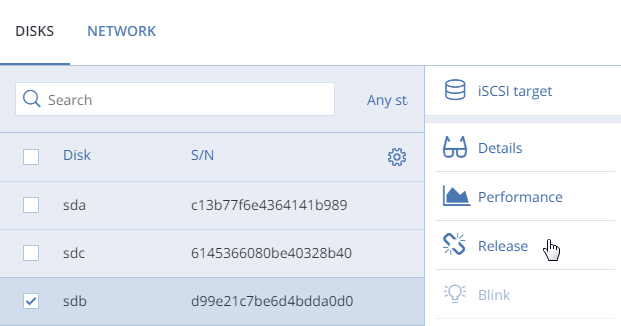

Open INFRASTRUCTURE > Nodes > <node> > Disks.

Select the failed disk and click Release.

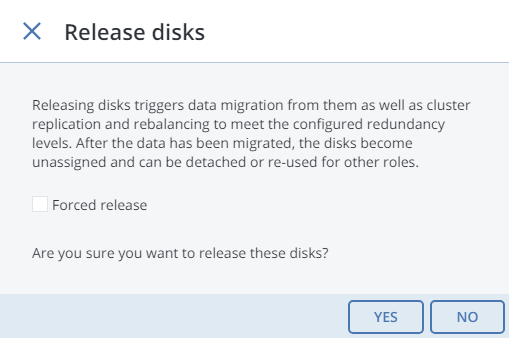

In the Release disk window, click YES.

Replace the disk with a new one.

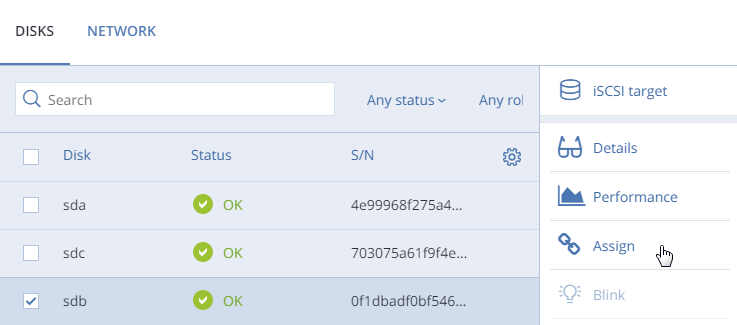

Back on INFRASTRUCTURE > Nodes > <node> > Disks, select the unassigned disk and click Assign.

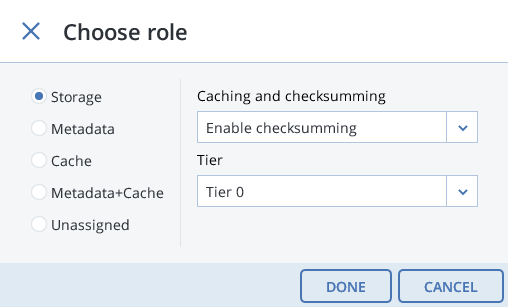

On the Choose role screen, select a disk role:

Storage. Use the disk to store chunks and run a chunk service on the node. From the Caching and checksumming drop-down list, select one of the following:

- Use SSD for caching and checksumming. Available and recommended only for nodes with SSDs.

- Enable checksumming (default). Recommended for nodes with HDDs as it provides better reliability.

- Disable checksumming. Not recommended for production. For an evaluation or testing environment, you can disable checksumming for nodes with HDDs, to provide better performance.

Data caching improves cluster performance by placing the frequently accessed data on an SSD.

Data checksumming generates checksums each time some data in the cluster is modified. When this data is then read, a new checksum is computed and compared with the old checksum. If the two are not identical, a read operation is performed again, thus providing better data reliability and integrity.
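The write-then-verify cycle described above can be illustrated with a toy in-memory store. This is a minimal sketch under stated assumptions, not how the product implements checksumming: the `ChecksummedStore` class and its methods are hypothetical, CRC32 from Python's `zlib` module stands in for whatever checksum the product actually uses, and the number of repeated reads is an arbitrary parameter.

```python
import zlib


class ChecksummedStore:
    """Toy store that checksums on write and verifies on read (illustrative only)."""

    def __init__(self, max_retries: int = 1):
        self._data = {}   # key -> payload bytes
        self._sums = {}   # key -> checksum recorded when the data was written
        self.max_retries = max_retries

    def write(self, key: str, payload: bytes) -> None:
        # A checksum is generated each time the data is modified.
        self._data[key] = payload
        self._sums[key] = zlib.crc32(payload)

    def _raw_read(self, key: str) -> bytes:
        # Stands in for the physical read from a disk.
        return self._data[key]

    def read(self, key: str) -> bytes:
        # Recompute the checksum on every read and compare it with the stored one.
        # On a mismatch, perform the read again, up to max_retries extra times.
        for _ in range(self.max_retries + 1):
            payload = self._raw_read(key)
            if zlib.crc32(payload) == self._sums[key]:
                return payload
        raise IOError(f"checksum mismatch for {key!r} after retries")
```

A transient read fault is masked by the repeated read, while persistent corruption surfaces as an error instead of being returned silently.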

If a node has an SSD, the SSD will be automatically configured to store checksums when you add the node to a cluster. This is the recommended setup. However, if a node does not have an SSD, checksums will be stored on a rotational disk by default. This means that the disk will have to handle double the I/O, because each data read or write operation is accompanied by a corresponding checksum read or write operation. For this reason, you may want to disable checksumming on nodes without SSDs to gain performance at the expense of checksum verification. This can be especially useful for hot data storage.

To add an SSD to a node that is already in the cluster (or replace a broken SSD), you will need to release the node from the cluster, attach the SSD, choose to join the node to the cluster again, and, while doing so, select Use SSD for caching and checksumming for each disk with the role Storage.

With the Storage role, you can also select a tier from the Tier drop-down list. To make better use of data redundancy, do not assign all the disks on a node to the same tier. Instead, make sure that each tier is evenly distributed across the cluster with only one disk per node assigned to it. For more information, see the Installation Guide.
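The tier guideline above (at most one disk per node on any given tier) can be checked against a planned layout with a few lines of code. This is a hedged sketch: the `tier_violations` function and the `(node, disk, tier)` tuple format are hypothetical and do not correspond to any product API or export format.

```python
from collections import Counter


def tier_violations(assignments):
    """Return (node, tier, disk_count) for every node with more than one disk on a tier.

    `assignments` is an iterable of (node, disk, tier) tuples describing a
    planned layout; the format is an assumption made for this example.
    """
    counts = Counter((node, tier) for node, _disk, tier in assignments)
    return sorted(
        (node, tier, n) for (node, tier), n in counts.items() if n > 1
    )


layout = [
    ("node1", "sdb", 0),
    ("node1", "sdc", 0),  # second disk on tier 0 on the same node
    ("node2", "sdb", 0),
    ("node2", "sdc", 1),
]
print(tier_violations(layout))  # [('node1', 0, 2)]
```

An empty result means that no node has two disks on the same tier.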

Note

If the disk contains old data that was not placed there by Acronis Cyber Infrastructure, the disk will not be considered suitable for use in Acronis Cyber Infrastructure.

Metadata. Use the disk to store metadata and run a metadata service on the node.

Cache. Use the disk to store write cache. This role is only for SSDs. To cache a specific storage tier, select it from the drop-down list. Otherwise, all tiers will be cached.

Metadata+Cache. A combination of two roles described above.

Unassigned. Remove the roles from the disk.

Take note of the following:

- If a physical server has a system disk with a capacity greater than 100 GB, that disk can be additionally assigned the Metadata or Storage role. In this case, a physical server can have as few as two disks.

- It is recommended to assign the System+Metadata role to an SSD. Assigning both these roles to an HDD will result in mediocre performance suitable only for cold data (e.g., archiving).

- The System role cannot be combined with the Cache and Metadata+Cache roles. The reason is that I/O generated by the operating system and applications would contend with I/O generated by journaling, negating the performance benefits of caching.
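The role constraints stated in this section can be collected into a simple pre-check to run before assigning roles. This is an illustrative sketch only: the `check_roles` function, its parameters, and the role strings are hypothetical, and the product performs its own validation in the admin panel. Roles are modeled as a set, so Metadata+Cache is `{"Metadata", "Cache"}`.

```python
def check_roles(roles, is_ssd: bool, size_gb: float) -> list:
    """Return a list of "error: ..." and "warning: ..." strings for a disk.

    Hypothetical helper; an empty list means no documented rule is violated.
    """
    roles = set(roles)
    problems = []

    # The Cache role is only for SSDs.
    if "Cache" in roles and not is_ssd:
        problems.append("error: the Cache role is only for SSDs")

    # System cannot be combined with Cache or Metadata+Cache.
    if "System" in roles and "Cache" in roles:
        problems.append("error: System cannot be combined with Cache or Metadata+Cache")

    # A system disk can take Metadata or Storage only if it is larger than 100 GB.
    if "System" in roles and roles & {"Metadata", "Storage"}:
        if size_gb <= 100:
            problems.append("error: the system disk must be larger than 100 GB")
        # System+Metadata is recommended on an SSD.
        if "Metadata" in roles and not is_ssd:
            problems.append("warning: System+Metadata on an HDD suits only cold data")

    return problems
```

For example, `check_roles({"System", "Metadata"}, is_ssd=True, size_gb=240)` returns an empty list, while the same roles on a 240 GB HDD produce a single warning.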

Click Done.

The disk will be assigned the chosen role and added to the cluster.