About the storage cluster

The storage cluster makes efficient use of the hardware through erasure coding, integrated SSD caching, automatic load balancing, and RDMA/InfiniBand support. The cluster space can be used for:

- iSCSI block storage (hot data and virtual machines)

- S3 object storage (protected with Acronis Notary blockchain and with geo-replication between datacenters)

- File storage (NFS)

In addition, Acronis Cyber Infrastructure is integrated with Acronis Cyber Protection solutions for storing backups in the cluster, sending them to cloud services (like Google Cloud, Microsoft Azure, and AWS S3), or storing them on NAS via the NFS protocol. Geo-replication is available for Backup Gateways set up on different storage backends: a local storage cluster, NFS share, or public cloud.

Data storage policies can be customized to meet various use cases: each data volume can have a specific redundancy mode, storage tier, and failure domain. Moreover, the data can be encrypted with the AES-256 standard.

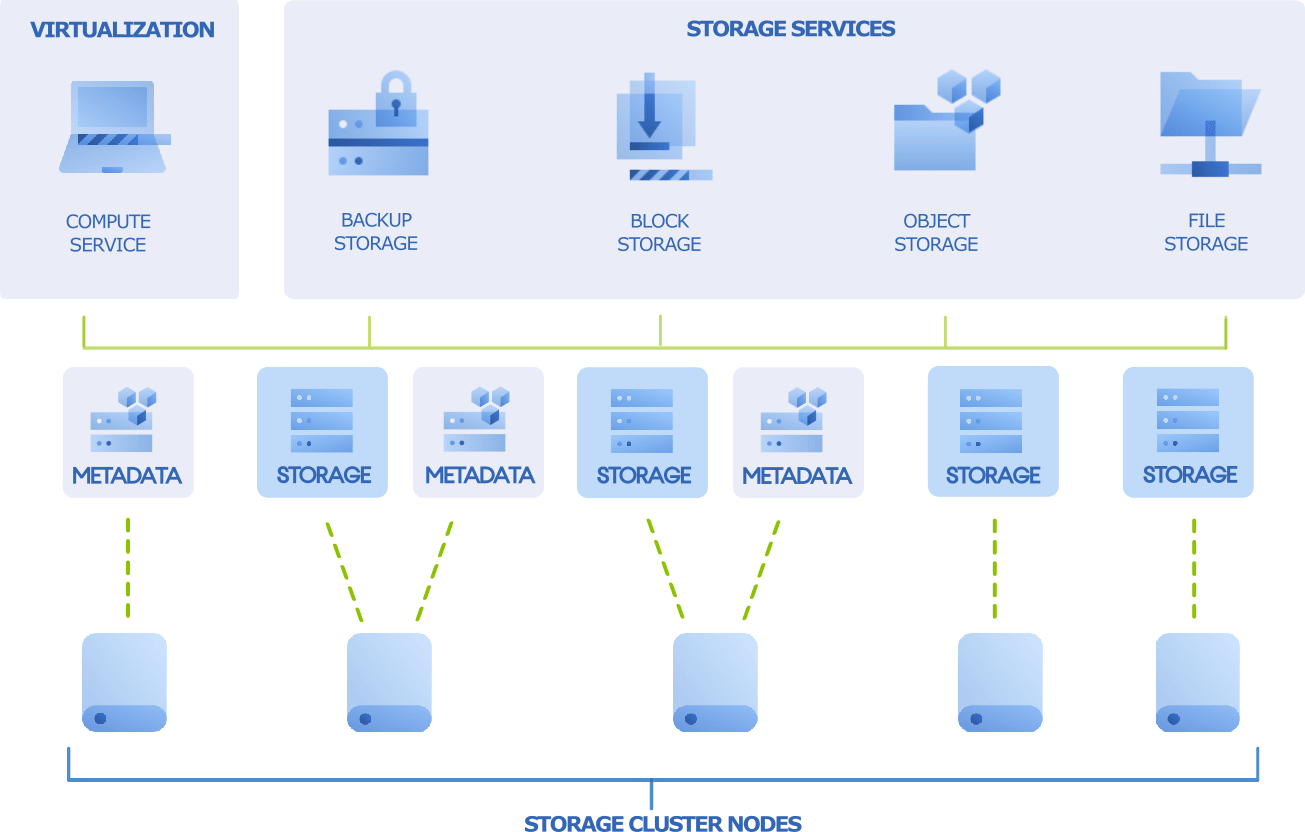

Storage cluster architecture

The fundamental component of Acronis Cyber Infrastructure is a storage cluster, a group of physical servers interconnected by the network. The core storage comprises server disks, which are assigned one or more roles. Typically, each server in the cluster runs core storage services that correspond to the following disk roles:

- Metadata

Metadata nodes run metadata services (MDS), store cluster metadata, and control how user files are split into chunks and where these chunks are located. Metadata nodes also ensure that chunks have the required number of replicas. Finally, they log all important events that happen in the cluster. To provide system reliability, Acronis Cyber Infrastructure uses the Paxos consensus algorithm, which guarantees fault tolerance as long as the majority of nodes running metadata services are healthy.

To ensure high availability of metadata in a production environment, metadata services must run on at least three cluster nodes. In this case, if one metadata service fails, the remaining two still form a majority and continue to control the cluster. However, it is recommended to have at least five metadata services, so that the cluster can survive the simultaneous failure of two nodes without data loss.

The primary metadata node is the master node in the metadata quorum. If the master MDS fails, another available MDS is selected as master.

- Storage

Storage nodes run chunk services (CS), store all data in the form of fixed-size chunks, and provide access to these chunks. All data chunks are replicated and the replicas are kept on different storage nodes to achieve high availability of data. If one of the storage nodes fails, the remaining healthy storage nodes continue providing the data chunks that were stored on the failed node. The storage role can only be assigned to a server with disks of a certain capacity.

Storage nodes can also benefit from data caching and checksumming:

- Data caching improves cluster performance by placing frequently accessed data on an SSD.

- Data checksumming generates checksums each time some data in the cluster is modified. When this data is then read, a new checksum is computed and compared with the old checksum. If the two are not identical, a read operation is performed again, thus providing better data reliability and integrity.

If a node has an SSD, it will be automatically configured to keep checksums on that SSD when you add the node to a cluster. This is the recommended setup. However, if a node does not have an SSD, checksums will be stored on a rotational disk by default. This means that the disk will have to handle double the I/O, because each data read/write operation is accompanied by a corresponding checksum read/write operation. For this reason, you may want to disable checksumming on nodes without SSDs to gain performance at the expense of checksum verification. This can be especially useful for hot data storage.

- Supplementary roles:

- SSD journal and cache

Boosts chunk read/write performance by creating write caches on selected solid-state drives (SSDs). It is also recommended to use such SSDs for metadata. The use of write journals may more than double the write speed in the cluster.

- System

One disk per node that is reserved for the operating system and unavailable for data storage.

Note the following:

- The System role cannot be unassigned from a disk.

- If a physical server has a system disk with a capacity greater than 100 GB, that disk can be additionally assigned the Metadata or Storage role.

- It is recommended to assign the System+Metadata role to an SSD. Assigning both of these roles to an HDD will result in mediocre performance suitable only for cold data (for example, archiving).

- The System role cannot be combined with the Cache and Metadata+Cache roles. The reason is that the I/O generated by the operating system and applications would contend with the I/O generated by journaling, thus negating its performance benefits.
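The System role notes above can be read as a small set of checks. The following is a minimal sketch in Python, not part of the product: the function `check_disk_roles`, the role strings, and the message texts are hypothetical names chosen for illustration, and only the rules stated in the notes are encoded.

```python
from __future__ import annotations

# Hypothetical role labels used only in this sketch.
SYSTEM, METADATA, STORAGE, CACHE = "system", "metadata", "storage", "cache"

# From the notes: a system disk must be larger than 100 GB to take extra roles.
MIN_SHARED_SYSTEM_DISK_GB = 100


def check_disk_roles(roles: set[str], capacity_gb: float, is_ssd: bool) -> list[str]:
    """Return a list of problems for one disk's role assignment (empty if none)."""
    problems = []
    if SYSTEM not in roles:
        return problems  # The notes above only constrain the system disk.

    # System cannot be combined with Cache (this also covers Metadata+Cache).
    if CACHE in roles:
        problems.append("error: System cannot be combined with Cache")

    # Metadata or Storage on the system disk requires more than 100 GB.
    if roles & {METADATA, STORAGE} and capacity_gb <= MIN_SHARED_SYSTEM_DISK_GB:
        problems.append("error: system disk must exceed 100 GB for Metadata or Storage")

    # System+Metadata is recommended on an SSD; an HDD suits only cold data.
    if METADATA in roles and not is_ssd:
        problems.append("warning: System+Metadata on an HDD suits only cold data")

    return problems


if __name__ == "__main__":
    print(check_disk_roles({SYSTEM, METADATA}, capacity_gb=480, is_ssd=True))
    print(check_disk_roles({SYSTEM, CACHE}, capacity_gb=480, is_ssd=True))
    print(check_disk_roles({SYSTEM, STORAGE}, capacity_gb=64, is_ssd=False))
```

The sketch separates hard restrictions (errors) from the SSD recommendation (a warning), mirroring the wording of the notes. It does not model any other placement rules the product may enforce.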

Along with the core storage services, servers run storage access points that allow top-level virtualization and storage services to access the storage cluster.

In addition, a server joined to the storage cluster does not have to run metadata or chunk services. In this case, the node runs only storage access points and serves as a storage cluster client.
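As a worked illustration of the metadata quorum sizing described for the Metadata role, the sketch below computes how many metadata services can fail while a majority stays healthy. It relies only on the majority rule stated in the text. The function names `quorum_size` and `tolerated_failures` are hypothetical and are not part of any Acronis tool.

```python
def quorum_size(mds_count: int) -> int:
    """Smallest number of healthy metadata services that forms a majority."""
    if mds_count < 1:
        raise ValueError("mds_count must be at least 1")
    return mds_count // 2 + 1


def tolerated_failures(mds_count: int) -> int:
    """Number of metadata services that can fail while a majority remains."""
    return mds_count - quorum_size(mds_count)


if __name__ == "__main__":
    for n in (1, 3, 4, 5):
        print(f"{n} MDS: quorum={quorum_size(n)}, tolerated failures={tolerated_failures(n)}")
```

With three metadata services the majority is two, so one failure is tolerated. With five, the majority is three, so two simultaneous failures are tolerated. An even count adds no tolerance over the next lower odd count: four services still tolerate only one failure.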