2.5. Planning Network

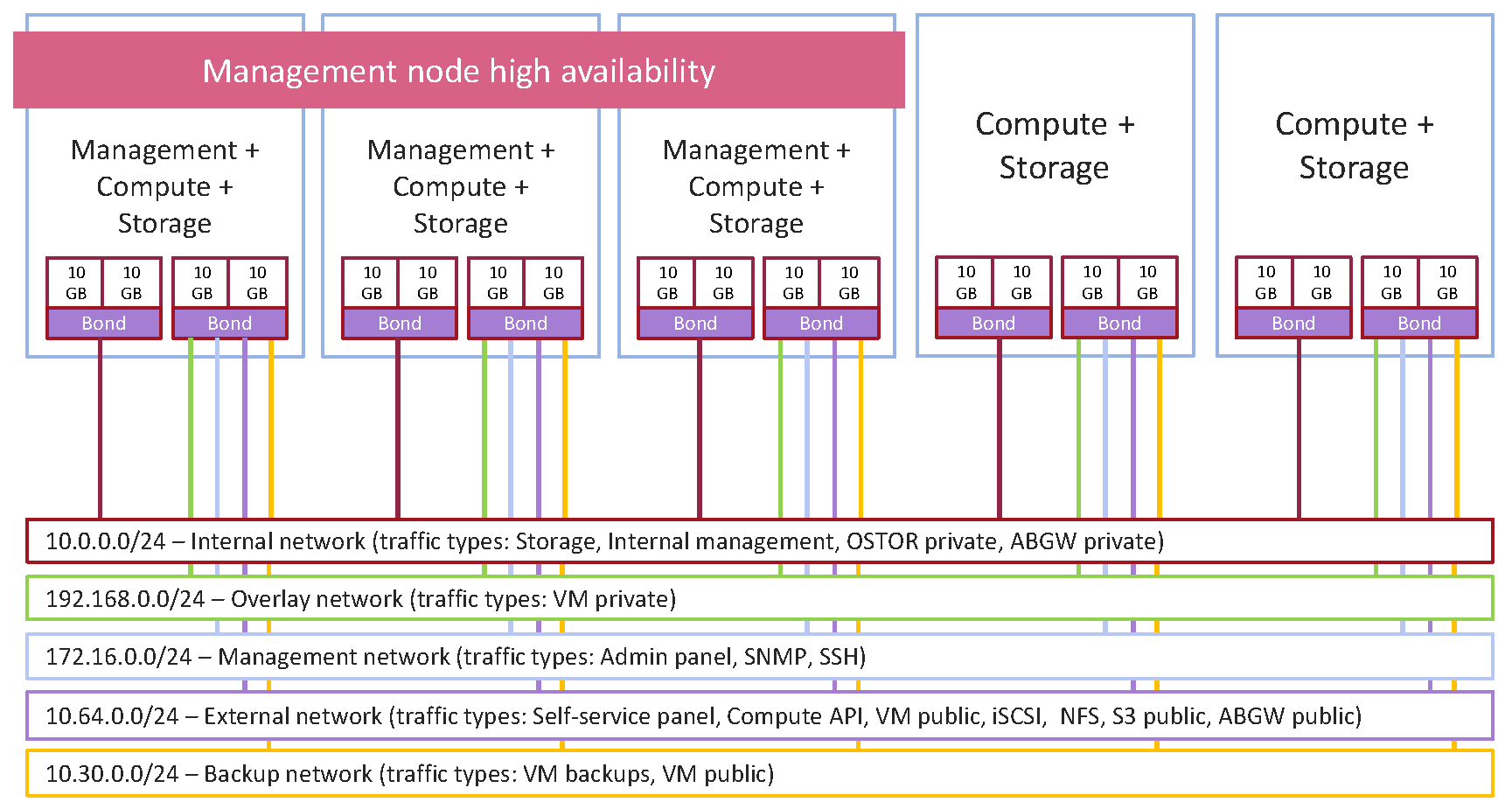

The recommended network configuration for Acronis Cyber Infrastructure is as follows:

- One bonded connection for internal management and storage traffic;

- One bonded connection with four VLANs over it:

  - for overlay network traffic between VMs

  - for management via the admin panel, SSH, and SNMP

  - for external access to and from the self-service panel, compute API, and VMs, as well as for public export of iSCSI, NFS, S3, and Backup Gateway data

  - for pulling VM backups by third-party backup management systems

2.5.1. General Network Requirements

- Internal storage traffic must be separated from other traffic types.

2.5.2. Network Limitations

- Nodes are added to clusters by their IP addresses, not FQDNs. Changing the IP address of a node in the cluster will remove that node from the cluster. If you plan to use DHCP in a cluster, make sure that IP addresses are bound to the MAC addresses of nodes’ network interfaces.

- Each node must have Internet access so updates can be installed.

- The MTU value is set to 1500 by default. See Step 2: Configuring the Network for information on setting an optimal MTU value.

- Network time synchronization (NTP) is required for correct statistics. It is enabled by default using the `chronyd` service. If you want to use `ntpdate` or `ntpd`, stop and disable `chronyd` first.

- The Internal management traffic type is assigned automatically during installation and cannot be changed in the admin panel later.

- Even though the management node can be accessed from a web browser by the hostname, you still need to specify its IP address, not the hostname, during installation.
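Because nodes are registered by IP address rather than by FQDN, it can help to validate a value before passing it to the installer. The following is a minimal sketch using Python's standard `ipaddress` module; the function name `is_ip_literal` is hypothetical and not part of the product.

```python
import ipaddress


def is_ip_literal(value):
    """Return True if value parses as an IPv4 or IPv6 address, False otherwise."""
    try:
        ipaddress.ip_address(value)
        return True
    except ValueError:
        # Hostnames, FQDNs, and malformed addresses all end up here.
        return False
```

For example, `is_ip_literal("10.0.0.5")` is true, while `is_ip_literal("node1.example.com")` is false.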

2.5.3. Per-Node Network Requirements and Recommendations

All network interfaces on a node must be connected to different subnets. A network interface can be a VLAN-tagged logical interface, an untagged bond, or an Ethernet link.
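The different-subnets requirement can be checked ahead of time from a planned addressing scheme. The sketch below is only an illustration: the interface names and addresses are made up, and `overlapping_interfaces` is a hypothetical helper built on the standard `ipaddress` module.

```python
import ipaddress
from itertools import combinations


def overlapping_interfaces(interfaces):
    """Return pairs of interface names whose subnets overlap.

    interfaces maps an interface name to its address in CIDR notation,
    for example {"bond0": "10.0.0.11/24"}.
    """
    nets = {
        name: ipaddress.ip_interface(cidr).network
        for name, cidr in interfaces.items()
    }
    return [
        (a, b)
        for a, b in combinations(nets, 2)
        # Only compare networks of the same IP version.
        if nets[a].version == nets[b].version and nets[a].overlaps(nets[b])
    ]


# Hypothetical plan: two VLAN interfaces were mistakenly put in the same /24.
plan = {
    "bond0": "10.0.0.11/24",
    "bond1.100": "192.168.10.11/24",
    "bond1.101": "192.168.10.140/24",
}
conflicts = overlapping_interfaces(plan)
```

An empty result means every interface in the plan sits in its own subnet.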

Even though cluster nodes have the necessary `iptables` rules configured, we recommend using an external firewall for untrusted public networks, such as the Internet.

The ports that will be opened on cluster nodes depend on the services that will run on the node and the traffic types associated with them. Before enabling a specific service on a cluster node, you need to assign the respective traffic type to a network this node is connected to. Assigning a traffic type to a network configures a firewall on nodes connected to this network, opens specific ports on node network interfaces, and sets the necessary `iptables` rules.

The table below lists all the required ports and services associated with them:

Table 2.5.3.1 Open ports on cluster nodes

| Service | Traffic type | Port | Description |
|---|---|---|---|
| Web control panel | Admin panel\* | TCP 8888 | External access to the admin panel. |
| Web control panel | Self-service panel | TCP 8800 | External access to the self-service panel. |
| Management | Internal management | any available port | Internal cluster management and transfers of node monitoring data to the admin panel. |
| Metadata service | Storage | any available port | Internal communication between MDS services, as well as with chunk services and clients. |
| Chunk service | Storage | any available port | Internal communication with MDS services and clients. |
| Client | Storage | any available port | Internal communication with MDS and chunk services. |
| Backup Gateway | ABGW public | TCP 44445 | External data exchange with Acronis Backup agents and Acronis Backup Cloud. |
| Backup Gateway | ABGW private | any available port | Internal management of and data exchange between multiple Backup Gateway services. |
| iSCSI | iSCSI | TCP 3260 | External data exchange with the iSCSI access point. |
| S3 | S3 public | TCP 80, 443 | External data exchange with the S3 access point. |
| S3 | OSTOR private | any available port | Internal data exchange between multiple S3 services. |
| NFS | NFS | TCP/UDP 111, 892, 2049 | External data exchange with the NFS access point. |
| NFS | OSTOR private | any available port | Internal data exchange between multiple NFS services. |
| Compute | Compute API\* | | External access to standard OpenStack API endpoints: |
| Compute | Compute API\* | TCP 5000 | Identity API v3 |
| Compute | Compute API\* | TCP 6080 | noVNC Websocket Proxy |
| Compute | Compute API\* | TCP 8004 | Orchestration Service API v1 |
| Compute | Compute API\* | TCP 8041 | Gnocchi API (billing metering service) |
| Compute | Compute API\* | TCP 8774 | Compute API |
| Compute | Compute API\* | TCP 8776 | Block Storage API v3 |
| Compute | Compute API\* | TCP 8780 | Placement API |
| Compute | Compute API\* | TCP 9292 | Image Service API v2 |
| Compute | Compute API\* | TCP 9313 | Key Manager API v1 |
| Compute | Compute API\* | TCP 9513 | Container Infrastructure Management API (Kubernetes service) |
| Compute | Compute API\* | TCP 9696 | Networking API v2 |
| Compute | Compute API\* | TCP 9888 | Octavia API v2 (load balancer service) |
| Compute | VM private | UDP 4789 | Network traffic between VMs in private virtual networks. |
| Compute | VM private | TCP 5900-5999 | VNC console traffic. |
| Compute | VM backups | TCP 49300-65535 | External access to NBD endpoints. |
| SSH | SSH | TCP 22 | Remote access to nodes via SSH. |
| SNMP | SNMP\* | UDP 161 | External access to storage cluster monitoring statistics via the SNMP protocol. |

\* Ports for these traffic types must only be open on management nodes.
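When planning rules for an external firewall, the fixed ports from the table above can be captured as data. The following sketch is an illustration only: `FIXED_PORTS` and `is_allowed` are hypothetical names, and traffic types listed with "any available port" are left out because they have no fixed port to encode.

```python
# Traffic type -> list of (protocol, first port, last port), taken from the table above.
FIXED_PORTS = {
    "Admin panel": [("tcp", 8888, 8888)],
    "Self-service panel": [("tcp", 8800, 8800)],
    "ABGW public": [("tcp", 44445, 44445)],
    "iSCSI": [("tcp", 3260, 3260)],
    "S3 public": [("tcp", 80, 80), ("tcp", 443, 443)],
    "NFS": [(p, n, n) for n in (111, 892, 2049) for p in ("tcp", "udp")],
    "Compute API": [
        ("tcp", n, n)
        for n in (5000, 6080, 8004, 8041, 8774, 8776,
                  8780, 9292, 9313, 9513, 9696, 9888)
    ],
    "VM private": [("udp", 4789, 4789), ("tcp", 5900, 5999)],
    "VM backups": [("tcp", 49300, 65535)],
    "SSH": [("tcp", 22, 22)],
    "SNMP": [("udp", 161, 161)],
}


def is_allowed(traffic_types, proto, port):
    """Return True if proto/port is listed for any of the given traffic types."""
    return any(
        p == proto and first <= port <= last
        for traffic_type in traffic_types
        for (p, first, last) in FIXED_PORTS[traffic_type]
    )
```

For a network that carries only the S3 public traffic type, `is_allowed(["S3 public"], "tcp", 443)` is true and `is_allowed(["S3 public"], "tcp", 8888)` is false.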

2.5.4. Kubernetes Network Requirements

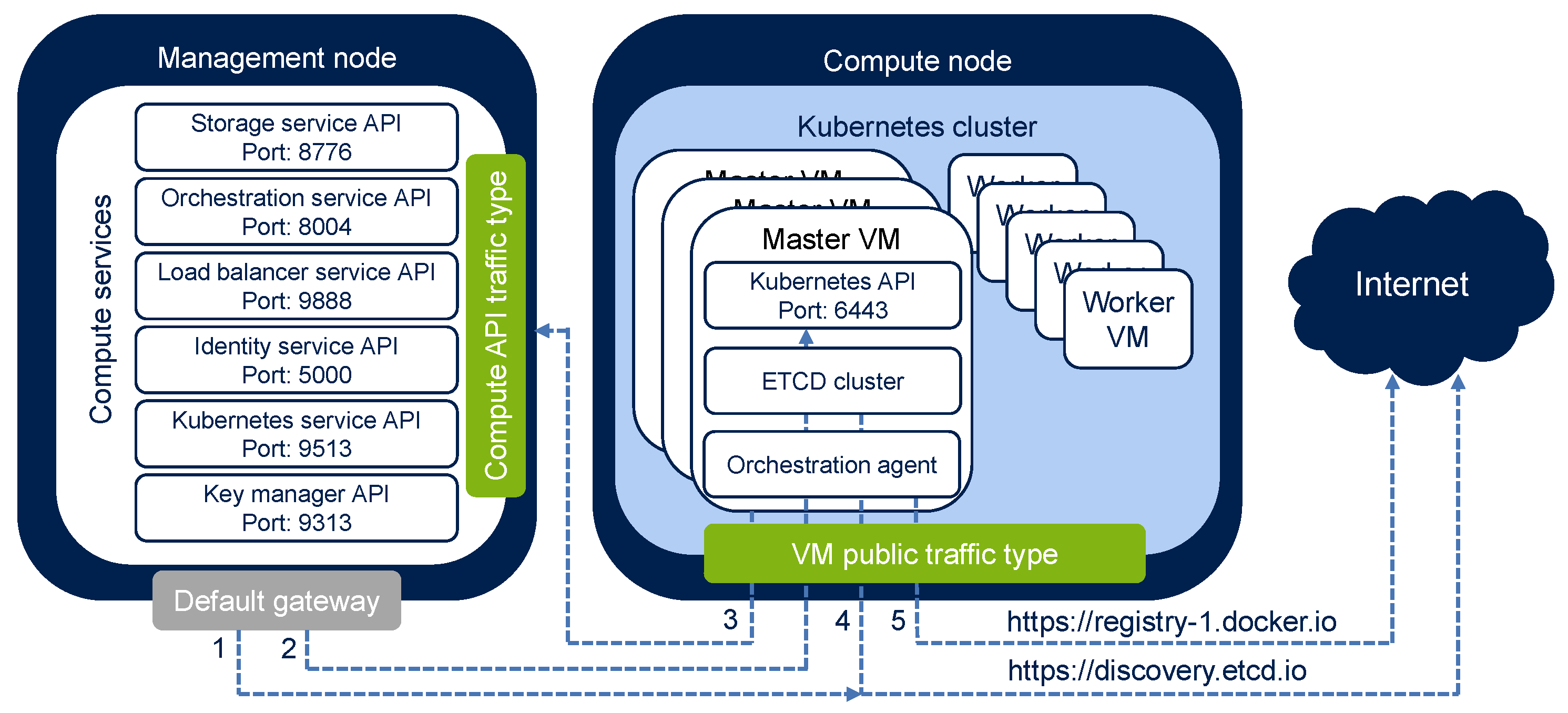

To be able to deploy Kubernetes clusters in the compute cluster and work with them, make sure your network configuration allows the compute and Kubernetes services to send the following network requests:

- The request to bootstrap the etcd cluster in the public discovery service, sent from all management nodes to https://discovery.etcd.io via the public network.

- The request to obtain the “kubeconfig” file, sent from all management nodes via the public network:

  - If HA for the master VM is enabled, the request is sent to the public or floating IP address of the load balancer VM associated with Kubernetes API on port 6443.

  - If HA for the master VM is disabled, the request is sent to the public or floating IP address of the Kubernetes master VM on port 6443.

- Requests from Kubernetes master VMs to the compute APIs (the Compute API traffic type) via the network with the VM public traffic type (via a publicly available VM network interface or a virtual router with enabled SNAT). By default, the compute API is exposed via the IP address of the management node (or via its virtual IP address if high availability is enabled). You can also access the compute API via a DNS name (refer to Setting a DNS Name for the Compute API).

- The request to update the etcd cluster member state in the public discovery service, sent from Kubernetes master VMs to https://discovery.etcd.io via the network with the VM public traffic type (via a publicly available VM network interface or a virtual router with enabled SNAT).

- The request to download container images from the public Docker Hub repository, sent from Kubernetes master VMs to https://registry-1.docker.io via the network with the VM public traffic type (via a publicly available VM network interface or a virtual router with enabled SNAT).
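One rough way to confirm that the outbound paths listed above are open is a plain TCP connection test, run from the machine that originates each request. The sketch below uses only the standard `socket` module; `can_connect` is a hypothetical helper, and a successful TCP connection does not prove that TLS or any intermediate proxy is configured correctly.

```python
import socket


def can_connect(host, port, timeout=5.0):
    """Return True if a TCP connection to host:port succeeds within timeout seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers DNS failures, refused connections, and timeouts.
        return False
```

Under these assumptions, a management node would call `can_connect("discovery.etcd.io", 443)`, and a Kubernetes master VM would call `can_connect("registry-1.docker.io", 443)`. The same helper can probe port 6443 on the public or floating IP address of the Kubernetes API.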

It is also required that the network where you create a Kubernetes cluster does not overlap with these default networks:

- 10.100.0.0/24, used for pod-level networking

- 10.254.0.0/16, used for allocating Kubernetes cluster IP addresses
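A candidate network can be checked against these two defaults before a Kubernetes cluster is created. This is a minimal sketch with the standard `ipaddress` module; `RESERVED` and `conflicts_with_defaults` are hypothetical names chosen for illustration.

```python
import ipaddress

# Default networks listed above, keyed by their purpose.
RESERVED = {
    "pod-level networking": ipaddress.ip_network("10.100.0.0/24"),
    "Kubernetes cluster IP addresses": ipaddress.ip_network("10.254.0.0/16"),
}


def conflicts_with_defaults(cidr):
    """Return the purposes of the default networks that the given CIDR overlaps."""
    # strict=False accepts CIDRs with host bits set, such as 10.100.0.5/24.
    net = ipaddress.ip_network(cidr, strict=False)
    return [
        purpose
        for purpose, reserved in RESERVED.items()
        if net.version == reserved.version and net.overlaps(reserved)
    ]
```

An empty list means the candidate network does not overlap either default. Note that a broad network such as 10.0.0.0/8 overlaps both.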

2.5.5. Network Recommendations for Clients

The following table lists the maximum network performance a client can get with the specified network interface. We recommend that clients use 10 Gbps network hardware between any two cluster nodes and minimize network latencies, especially if SSDs are used.

| Storage network interface | Node max. I/O | VM max. I/O (replication) | VM max. I/O (erasure coding) |
|---|---|---|---|
| 1 Gbps | 100 MB/s | 100 MB/s | 70 MB/s |
| 2 x 1 Gbps | ~175 MB/s | 100 MB/s | ~130 MB/s |
| 3 x 1 Gbps | ~250 MB/s | 100 MB/s | ~180 MB/s |
| 10 Gbps | 1 GB/s | 1 GB/s | 700 MB/s |
| 2 x 10 Gbps | 1.75 GB/s | 1 GB/s | 1.3 GB/s |
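To relate these figures to raw link capacity, the table can be encoded as data and compared with the line rate. The sketch below is illustrative: the names are hypothetical, approximate values are stored without the "~", and 1 GB/s is assumed to mean 1000 MB/s.

```python
# (number of links, Gbps per link) -> maximum I/O in MB/s, copied from the table above.
# Assumption: 1 GB/s is treated as 1000 MB/s.
MAX_IO_MB_S = {
    (1, 1): {"node": 100, "vm_replication": 100, "vm_erasure_coding": 70},
    (2, 1): {"node": 175, "vm_replication": 100, "vm_erasure_coding": 130},
    (3, 1): {"node": 250, "vm_replication": 100, "vm_erasure_coding": 180},
    (1, 10): {"node": 1000, "vm_replication": 1000, "vm_erasure_coding": 700},
    (2, 10): {"node": 1750, "vm_replication": 1000, "vm_erasure_coding": 1300},
}


def line_rate_mb_s(links, gbps_per_link):
    """Raw line rate in MB/s (1 Gbps = 1000 Mbit/s = 125 MB/s)."""
    return links * gbps_per_link * 1000 / 8


def node_efficiency(links, gbps_per_link):
    """Fraction of the raw line rate that the table lists as node max. I/O."""
    listed = MAX_IO_MB_S[(links, gbps_per_link)]["node"]
    return listed / line_rate_mb_s(links, gbps_per_link)
```

For a single 1 Gbps link, the raw line rate is 125 MB/s and the table lists 100 MB/s, which is 80% of line rate. The table also shows that adding links raises node and erasure-coding throughput, while VM I/O with replication stays at the single-link figure.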