About block storage

Acronis Cyber Infrastructure can be used as a block storage backend over iSCSI. Block storage is optimized for data that must be frequently accessed and edited. It is ideal for hot data and virtual machines.

Block storage enables managing data as blocks, as opposed to files in file systems or objects in S3 storage. These blocks can be accessed from different operating systems in a SAN-like manner.

Acronis Cyber Infrastructure allows you to create groups of redundant targets running on different storage nodes. To each target group, you can attach multiple storage volumes with their own redundancy provided by the storage layer. Targets export these volumes as LUNs.

You can create multiple target groups on the same nodes. A volume, however, can be attached to only one target group at a time.

Each node in a target group can host a single target for that group. If one of the nodes in a target group fails along with its targets, healthy targets from the same group continue to provide access to the LUNs previously serviced by the failed targets.
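The two rules above (one target per node within a group, and one target group per volume at a time) can be illustrated with a small model. This is only a sketch: the `TargetGroup` and `Cluster` classes and all node, target, and volume names are hypothetical and are not part of any Acronis Cyber Infrastructure API.

```python
class TargetGroup:
    """Hypothetical model of a target group (not a product API)."""

    def __init__(self, name):
        self.name = name
        self.targets = {}     # node name -> target name
        self.volumes = set()  # volumes exported as LUNs by this group

    def add_target(self, node, target):
        # A node can host only a single target for a given group.
        if node in self.targets:
            raise ValueError(f"{node} already hosts a target for {self.name}")
        self.targets[node] = target


class Cluster:
    """Hypothetical registry that tracks which group owns each volume."""

    def __init__(self):
        self.attached = {}  # volume name -> owning TargetGroup

    def attach(self, volume, group):
        # A volume can be attached to only one target group at a time.
        owner = self.attached.get(volume)
        if owner is not None and owner is not group:
            raise ValueError(f"{volume} is already attached to {owner.name}")
        self.attached[volume] = group
        group.volumes.add(volume)

    def detach(self, volume):
        # Detaching frees the volume so it can be attached to another group.
        group = self.attached.pop(volume)
        group.volumes.discard(volume)
```

In this model, several groups can place targets on the same node, but moving a volume to another group requires detaching it from its current group first.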

You can restrict access to a target group by using CHAP authentication or an access control list (ACL).

Sample block storage

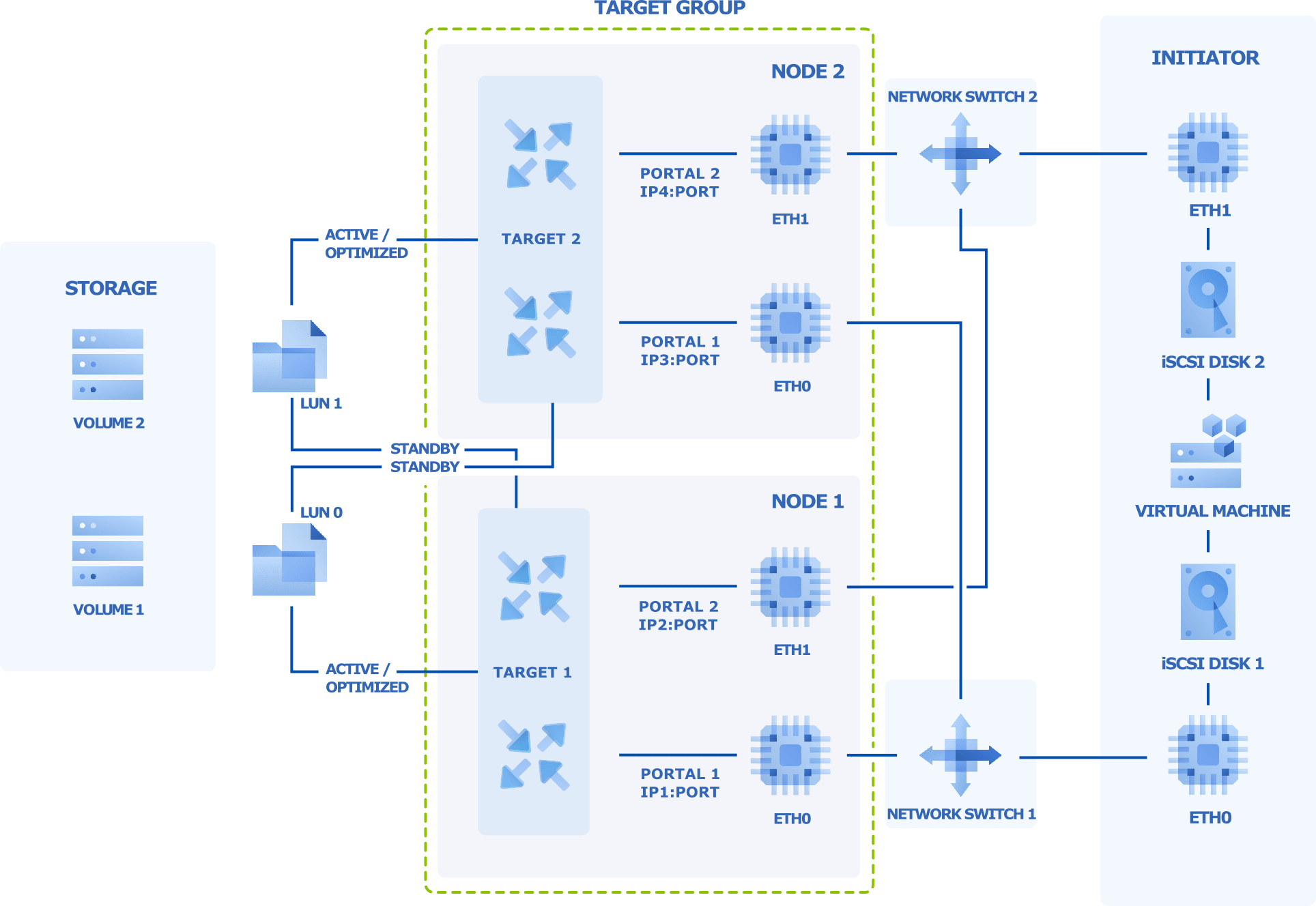

The figure above shows a typical setup for exporting Acronis Cyber Infrastructure disk space via iSCSI.

The figure shows two volumes located on redundant storage provided by Acronis Cyber Infrastructure. The volumes are attached as LUNs to a group of two targets running on Acronis Cyber Infrastructure nodes. Each target has two portals, one per network interface, with the iSCSI traffic type. This makes a total of four discoverable endpoints with different IP addresses. Each target provides access to all LUNs attached to the group.
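The endpoint count in this setup can be worked through in a few lines. The following sketch assumes hypothetical target names, volume names, and IP addresses (taken from the reserved documentation ranges); none of them come from the product.

```python
# Hypothetical layout: two targets, each with one portal per network interface.
targets = {
    "target1": {"eth0": "192.0.2.11", "eth1": "198.51.100.11"},
    "target2": {"eth0": "192.0.2.12", "eth1": "198.51.100.12"},
}

# Two volumes attached to the group as LUNs (names are illustrative).
luns = ["volume1", "volume2"]

# Every portal is a separately discoverable endpoint with its own IP address.
endpoints = [
    (target, iface, ip)
    for target, portals in targets.items()
    for iface, ip in portals.items()
]

# Each target exposes all LUNs of the group, so every endpoint reaches every LUN.
paths = [(ip, lun) for _, _, ip in endpoints for lun in luns]

print(len(endpoints), "endpoints,", len(paths), "endpoint-to-LUN combinations")
```

With two targets and two portals each, the model yields four endpoints, and because both LUNs are reachable through every endpoint, eight endpoint-to-LUN combinations.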

Targets work in the ALUA (Asymmetric Logical Unit Access) mode, so one path to the volume is preferred and considered Active/Optimized while the other is Standby. The Active/Optimized path is normally chosen by the initiator (Explicit ALUA). If the initiator cannot do so, because it either does not support Explicit ALUA or times out, the path is chosen by the storage itself (Implicit ALUA).

Network interfaces eth0 and eth1 on each node are connected to different switches for redundancy. The initiator, for example, VMware ESXi, is connected to both switches as well and provides volumes as iSCSI disks 1 and 2 to a VM via different network paths.

If the Active/Optimized path becomes unavailable (for example, because the node with the target or a network switch fails), the Standby path through the other target is used instead to connect to the volume. When the Active/Optimized path is restored, it is used again.
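The failover and failback behavior described above can be sketched as a simple selection rule. This is a simplified illustration, not the actual ALUA implementation: the `Path` class, the `select_path` function, and the target names are hypothetical, and the sketch keeps path states fixed rather than modeling how they are negotiated between the initiator and the storage.

```python
from dataclasses import dataclass


@dataclass
class Path:
    target: str
    state: str              # "active/optimized" or "standby"
    available: bool = True  # False while the node or switch is down


def select_path(paths):
    """Return the preferred available path, or None if all paths are down."""
    # Prefer the Active/Optimized path; fall back to Standby only if needed.
    for wanted in ("active/optimized", "standby"):
        for path in paths:
            if path.available and path.state == wanted:
                return path
    return None


paths = [
    Path("target1", "active/optimized"),
    Path("target2", "standby"),
]

normal = select_path(paths).target      # preferred path is in use

paths[0].available = False              # node or switch failure
failover = select_path(paths).target    # Standby path takes over

paths[0].available = True               # preferred path is restored
failback = select_path(paths).target    # preferred path is used again
```

Because the selection is re-evaluated against the current availability of each path, the sketch returns to the preferred path as soon as it is marked available again, which mirrors the failback described in the text.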